At the beginning of 2015, I wrote about a patent that told us Google might influence search results based on media that you had watched or listened to before (radio, television, movies, and so on). That post was Google Media Consumption History Patent Filed. I was reminded of that media consumption history patent by one about “environmental information” that was granted by the United States Patent and Trademark Office (USPTO) yesterday. I was also reminded of a patent that described Google Glass being able to recognize songs, which I wrote about in Google Glass to Perform Song Recognition, and Play ‘Name that Tune’?

Imagine watching a movie on TV, and asking your phone, “who is the actor starring in this movie?”

The patent tells us that the process it follows might involve using “environmental information, such as ambient noise,” to help answer a natural language query. The patent puts this fairly straightforwardly:

For example, a user may ask a question about a television program that they are viewing, such as “What actor is in this movie?” The user’s mobile device detects the user’s utterance and environmental data, which may include the soundtrack audio of the television program. The mobile computing device encodes the utterance and the environmental data as waveform data, and provides the waveform data to a server-based computing environment.

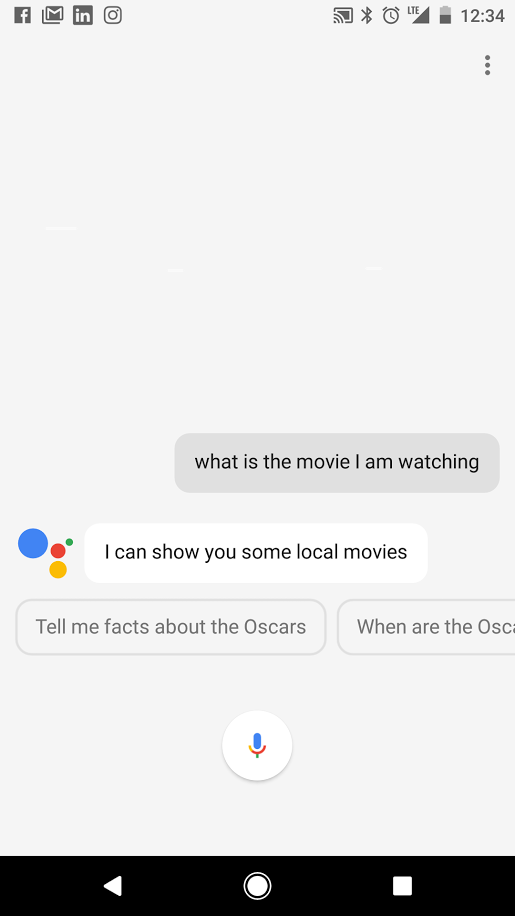

I am going to try this out to see if Google can use environmental information to give me an answer.

The patent describes the actual process behind its operation in more technical detail:

The computing environment separates the utterance from the environmental data of the waveform data and then obtains a transcription of the utterance. The computing environment further identifies entity data relating to the environmental data and the utterance, such as by identifying the name of the movie. From the transcription and the entity data, the computing environment can then identify one or more results, for example, results in response to the user’s question. Specifically, one or more results can include an answer to the user’s question of “What actor is in this movie?” (e.g., the name of the actor). The computing environment can provide such results to the user of the mobile computing device.
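The steps in that passage can be sketched in a few lines of Python. This is only a toy illustration under loud assumptions, not the patent's implementation: the `Waveform` class, the `FINGERPRINTS` and `FACTS` lookup tables, and every function name are hypothetical stand-ins, and the "audio" is represented by plain strings rather than real signal data. It shows the order of operations the patent describes: separate the utterance from the environmental data, transcribe the utterance, identify an entity from the environmental data, and combine both into a query.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Waveform:
    """Hypothetical stand-in for the encoded audio sent to the server."""
    utterance_audio: str    # pretend speech audio (here, just the spoken words)
    environment_audio: str  # pretend ambient audio (here, a fingerprint key)


# Assumed lookup tables; a real system would use audio fingerprinting
# and a knowledge base instead of these dictionaries.
FINGERPRINTS = {"fp-captain-phillips": "Captain Phillips"}
FACTS = {("Captain Phillips", "actor"): "Tom Hanks"}


def separate(waveform: Waveform) -> Tuple[str, str]:
    """Split the waveform into the utterance and the environmental data."""
    return waveform.utterance_audio, waveform.environment_audio


def transcribe(utterance_audio: str) -> str:
    """Toy speech recognition: the 'audio' is already text, so normalize it."""
    return utterance_audio.strip().lower()


def identify_entity(environment_audio: str) -> Optional[str]:
    """Toy entity identification: look the soundtrack up in the fingerprint table."""
    return FINGERPRINTS.get(environment_audio)


def answer(transcription: str, entity: Optional[str]) -> Optional[str]:
    """Answer a query made of the transcription plus the identified entity."""
    if entity is None:
        return None  # the soundtrack was not recognized
    if "actor" in transcription:
        return FACTS.get((entity, "actor"))
    return None


def handle(waveform: Waveform) -> Optional[str]:
    """Run the whole sketch: separate, transcribe, identify, then answer."""
    utterance_audio, environment_audio = separate(waveform)
    return answer(transcribe(utterance_audio), identify_entity(environment_audio))


if __name__ == "__main__":
    question = Waveform("What actor is in this movie?", "fp-captain-phillips")
    print(handle(question))
```

In this sketch the question alone is not enough to answer; the entity recovered from the ambient audio is what turns “this movie” into something that can be looked up. When the soundtrack is not recognized, `handle` returns `None`.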

I will be surprised if this query works, but it also reminds me of a patent that says I could stand in front of a local landmark and Google, relying upon location-based information, might be able to identify that landmark, as I described in the post How Google May Interpret Queries Based on Locations and Entities (Tested).

I turned up the audio on my TV and I asked, “Who is the Actor in the movie I am watching?”

Answer:

The actor I was asking about was Tom Hanks, and the movie was “Captain Phillips.” Google didn’t appear to be able to identify the movie I was watching from the audio soundtrack quite yet.

The environmental information patent is:

Answering questions using environmental context

Inventors: Matthew Sharifi and Gheorghe Postelnicu

Assignee: Google Inc. (Mountain View, CA)

United States Patent 9,576,576

Granted: February 21, 2017

Filed: August 1, 2016

Abstract

Methods, systems, and apparatus, including computer programs encoded on a computer storage medium, for receiving audio data encoding an utterance and environmental data, obtaining a transcription of the utterance, identifying an entity using the environmental data, submitting a query to a natural language query processing engine, wherein the query includes at least a portion of the transcription and data that identifies the entity, and obtaining one or more results of the query.

Takeaways

The process described in this patent doesn’t seem to be working at this point, but it could be sometime soon. Likewise, the patent about using location-based information to identify a landmark doesn’t seem to be working yet either. Both patents take advantage of the sensors built into phones and make that information part of a query. I find myself thinking of Star Trek’s Tricorder, with features like media and location built into queries. I suspect that we will see more useful features from phones as the Internet of Things comes into being, and that we will be able to communicate with many devices in the future.
