
Added (2020-02-23) Questions and Answers: A few questions about this Website Representation Vectors patent, and some things about it that I wanted to address.

1. The “Medic” update – Barry Schwartz gave the update that name in August of 2018 because it appeared to affect medical websites, but it also affected other types of websites. This patent application, also filed in August of 2018, covers a range of industries and uses health and artificial intelligence sites as examples. One example describes the authors of health sites: doctors as experts, medical students as apprentices, and laypeople as nonexperts. That is why I asked a Go Fish Digital graphic designer for a masthead image for this post showing one of each. The patent covers different industries and different levels of expertise. I chose an illustration that reflects the “medic” aspect of the process because I believed it was an accurate reflection of what the patent covers.


2. Quality Scores – The patent explains how it might further classify websites based upon whether their quality scores meet certain thresholds. The patent does not specifically define a “quality score,” but Google has several patents about quality scores for websites. A good description from Google of what a high-quality website includes is a Google blog post from Amit Singhal: More guidance on building high-quality sites.

3. Rankings of Results – How might sites rank under the process from this patent? Queries from specific knowledge domains (covering specific topics) might return results from sites classified as belonging to the same knowledge domain. For instance, a medical query such as “what are the symptoms of mononucleosis” is best answered by a site classified as being from a medical knowledge domain. The patent also tells us that part of its purpose is to limit possible results pages to those whose classifications involving industry and expertise meet sufficient quality thresholds. It then ranks those pages based upon relevance and authority scores:

[0024] The search results are ranked based on scores related to the resources identified by the search results, such as information retrieval (“IR”) scores, and optionally a separate ranking of each resource relative to other resources (e.g., an authority score). The search results are ordered according to these scores and provided to the user device according to the order.
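To make the filter-then-rank idea concrete, here is a minimal Python sketch. It is not the patent's implementation. The `Result` fields, the `quality_threshold` value, and the choice to multiply the IR score by the authority score are all assumptions made for illustration; the patent does not say how the scores are combined.

```python
from dataclasses import dataclass


@dataclass
class Result:
    url: str
    knowledge_domain: str   # hypothetical label from the classification step
    quality_score: float    # hypothetical site-level quality score
    ir_score: float         # information retrieval (relevance) score
    authority_score: float  # ranking of the resource relative to others


def rank_results(results, query_domain, quality_threshold=0.5):
    """Keep results from the query's knowledge domain that meet the quality
    threshold, then order them by relevance and authority."""
    eligible = [
        r for r in results
        if r.knowledge_domain == query_domain
        and r.quality_score >= quality_threshold
    ]
    # Assumption: the two scores are multiplied together.
    return sorted(
        eligible,
        key=lambda r: r.ir_score * r.authority_score,
        reverse=True,
    )


candidates = [
    Result("clinic.example", "medical", 0.9, 0.8, 0.9),
    Result("forum.example", "medical", 0.3, 0.9, 0.4),
    Result("bank.example", "finance", 0.9, 0.7, 0.8),
    Result("journal.example", "medical", 0.8, 0.9, 0.9),
]
ranked = rank_results(candidates, "medical")
print([r.url for r in ranked])
```

In this toy data, the forum page drops out because it falls below the quality threshold, and the finance page drops out because it belongs to a different knowledge domain. Only the remaining two medical pages are ranked.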

Classification of Websites

Google tells us that they may use Website Representation Vectors to classify sites based upon features found on those sites.

This post is about a new Google patent application filed in August of 2018 and published at the World Intellectual Property Organization (WIPO) last week.

The patent application uses neural networks to understand patterns and features behind websites to classify those sites.

This website classification system refers to “a composite-representation, e.g., vector, for a website classification within a particular knowledge domain.”

Those knowledge domains can be topics such as health, finance, and others. Classifying sites into specific knowledge domains can be an advantage for a search system, which can use those classifications to return search results in response to a search query.

Those website classifications can be more diverse than representing categories of websites within knowledge domains. The patent breaks the categories down much further:

For instance, the website classifications may include a first category of websites authored by experts in the knowledge domain, e.g., doctors, a second category of websites authored by apprentices in the knowledge domain, e.g., medical students, and a third category of websites authored by laypersons in the knowledge domain.

I am reminded of discussions in the SEO industry about the Google Quality Raters Guidelines and their references to E-A-T, or Expertise, Authoritativeness, and Trustworthiness. The Guidelines point out health sites with different levels of E-A-T, much like the classifications from this new Google patent application about website representation vectors:

High E-A-T medical advice should be written or produced by people or organizations with appropriate medical expertise or accreditation. High E-A-T medical advice or information should be written or produced in a professional style and should be edited, reviewed, and updated regularly.

The Guidelines also tell us that some sites are created by people with less formal expertise on a topic:

It’s even possible to have everyday expertise in YMYL topics. For example, there are forums and support pages for people with specific diseases. Sharing personal experience is a form of everyday expertise. Consider this example.

Here, forum participants are telling how long their loved ones lived with liver cancer. This is an example of sharing personal experiences (in which they are experts), not medical advice. Specific medical information and advice (rather than descriptions of life experiences) should come from doctors or other health professionals.

The classifications include an expert level of sites in the health domain, an apprentice level of sites, and a layperson level of sites.

These classifications come from different levels of expertise. This patent tells us that it is ranking pages based on authority too, but it says nothing about trustworthiness, so it isn’t ranking sites completely based on E-A-T. The process captures two aspects of E-A-T, so it can fulfill part of the aim of the Quality Raters Guidelines: the sites that rank well, and that human evaluators then see, would exhibit high levels of authority and expertise.

Also, if this process limits the number of sites that Google has to return search results from based upon which knowledge domain they might be in, it does mean that Google is searching through fewer sites to return results than Google’s entire index of the web. Let’s look at the process behind this patent application in a little more depth.

It classifies many websites into particular knowledge domains, and it tries to find different levels of sites within those particular knowledge domains:

- Receiving representations of websites and quality scores that represent quality measures of the sites relative to other sites

- Classifying as first websites each of the sites with a quality score below a first threshold, with at least one of the sites having a quality score below the first threshold

- Classifying as second websites each of the sites with a quality score above a second threshold that is greater than the first threshold, with at least one of the sites having a quality score greater than the first threshold

- Generating a first composite representation of the websites classified as the first websites

- Generating a second composite representation of the websites classified as the second websites

- Receiving a representation of another website

- Determining a first measure of difference between the first composite representation and that representation

- Determining a second measure of difference between the second composite representation and that representation

- Based on the first measure of difference and the second measure of difference, classifying the other website as one of the first websites, one of the second websites, or one of the third websites that are not classified as either the first websites or the second websites
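The steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the composite representation is taken to be an element-wise average of the site vectors, the measure of difference is taken to be Euclidean distance, and `max_distance` is a hypothetical cutoff for deciding that a site belongs to neither group. The patent leaves those choices open.

```python
import math


def mean_vector(vectors):
    """Element-wise average of equal-length vectors (assumed composite)."""
    return [sum(values) / len(vectors) for values in zip(*vectors)]


def distance(a, b):
    """Euclidean distance, used here as the measure of difference."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def build_composites(sites, first_threshold, second_threshold):
    """sites is a list of (vector, quality_score) pairs.

    Returns the composite for the low-scoring 'first' websites and the
    composite for the high-scoring 'second' websites."""
    first = [vec for vec, score in sites if score < first_threshold]
    second = [vec for vec, score in sites if score > second_threshold]
    return mean_vector(first), mean_vector(second)


def classify(vector, first_composite, second_composite, max_distance):
    """Pick the closer composite, or 'third' if neither is close enough."""
    d_first = distance(vector, first_composite)
    d_second = distance(vector, second_composite)
    if min(d_first, d_second) > max_distance:
        return "third"
    return "first" if d_first < d_second else "second"


# Toy two-dimensional representations with made-up quality scores.
sites = [
    ([0.0, 0.0], 0.1),
    ([0.2, 0.0], 0.2),
    ([1.0, 1.0], 0.9),
    ([0.8, 1.0], 0.8),
    ([0.5, 0.5], 0.5),  # between the thresholds, so used in neither composite
]
first_c, second_c = build_composites(sites, first_threshold=0.3, second_threshold=0.7)

print(classify([0.9, 0.9], first_c, second_c, max_distance=0.5))
print(classify([0.1, 0.1], first_c, second_c, max_distance=0.5))
print(classify([5.0, 5.0], first_c, second_c, max_distance=0.5))
```

The point of the sketch is that a new, previously unseen site never needs its own quality score. It only needs a representation that can be compared with the two composites.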

Queries Request Responses from Particular Knowledge Domains

The patent application tells us that its process includes using terms from the query to understand that the query requests responsive data from a particular knowledge domain.

It may search for responses from that particular knowledge domain. The process involves:

- Generating, from the authoritative data sources, preprocessed responses to future queries

- Receiving, after generating the preprocessed responses, a query determined to indicate the particular knowledge domain

- In response, responding to the query with one of the preprocessed responses
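A rough Python sketch of that flow follows. Everything in it is hypothetical: the `DOMAIN_TERMS` word lists, the word-overlap rule for detecting a knowledge domain, and the shape of the source records are placeholders for whatever a real system would learn or store.

```python
# Hypothetical term lists; a real system would learn this mapping.
DOMAIN_TERMS = {
    "medical": {"symptoms", "mononucleosis", "diagnosis"},
    "finance": {"mortgage", "interest", "loan"},
}


def detect_domain(query):
    """Return the knowledge domain whose terms overlap the query most, or None."""
    words = set(query.lower().split())
    best_domain, best_overlap = None, 0
    for domain, terms in DOMAIN_TERMS.items():
        overlap = len(words & terms)
        if overlap > best_overlap:
            best_domain, best_overlap = domain, overlap
    return best_domain


def preprocess(authoritative_sources):
    """Build {(domain, topic): answer} ahead of time, before any query arrives."""
    return {(s["domain"], s["topic"]): s["answer"] for s in authoritative_sources}


def respond(query, preprocessed):
    """Answer from the preprocessed responses for the query's domain, if any."""
    domain = detect_domain(query)
    if domain is None:
        return None
    words = set(query.lower().split())
    for (resp_domain, topic), answer in preprocessed.items():
        if resp_domain == domain and topic in words:
            return answer
    return None


sources = [
    {
        "domain": "medical",
        "topic": "mononucleosis",
        "answer": "Placeholder answer from an authoritative medical source.",
    },
]
cache = preprocess(sources)
print(respond("what are the symptoms of mononucleosis", cache))
print(respond("best pizza near me", cache))
```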

Advantages of This Website Representation Vectors Approach

The search system may select, search, or both, data for only websites with a particular classification, reducing the computer resources necessary to find search results, e.g., by not having to select or search every website irrespective of classification. This can:

- Reduce the amount of storage needed to store data for potential search results, e.g., may need only data storage for websites with the particular classification

- Reduce the number of websites analyzed by the search system, e.g., limiting a search to sites with the particular classification

- Reduce network bandwidth used to provide search results to a requesting device

- Address potential problems with earlier systems, such as higher use of bandwidth, memory, processor cycles, power, or a combination of two or more of these

- Improve search results pages generated by a search system by including identification of only sites with a particular classification, e.g., a qualitative classification, in generated search results pages

- Use characteristics learned from existing sites to classify previously unseen websites without requiring user input for the classification

- Detect websites that are more likely responsive to queries for a knowledge domain, e.g., are more likely authoritative for the knowledge domain, by classifying previously unseen websites

- Use a composite representation based upon existing website classifications, which means the characteristics used by the classification are not limited by human discernible characteristics and can be any characteristic that can be learned by analysis of the website

Note that it is helping to identify sites that are authoritative for different knowledge domains.

This Website Representation Vector Patent Application is at:

Website Representation Vector to Generate Search Results and Classify Website

Publication Number: WO/2020/033805

Applicants: GOOGLE LLC

Inventors: Yevgen Tsykynovskyy

Filed: August 10, 2018

Publication Date: February 13, 2020

Abstract:

Methods, systems, and apparatus, including computer programs encoded on computer storage media, use website representations to generate, store, or both, search results. One of the methods includes receiving data representing each website in the first plurality of websites associated with a first knowledge domain of a plurality of knowledge domains and having a first classification; receiving data representing each website in the second plurality of websites associated with the first knowledge domain and having a second classification; generating a first composite-representation of the first plurality of websites; generating a second composite-representation of the second plurality of websites; receiving a representation of a third website; determining a first difference measure between the first composite-representation and the representation; determining a second difference measure between the second composite-representation and the representation; and based on the first difference measure and the second difference measure, classifying the third website.

Data From the Web Classification System

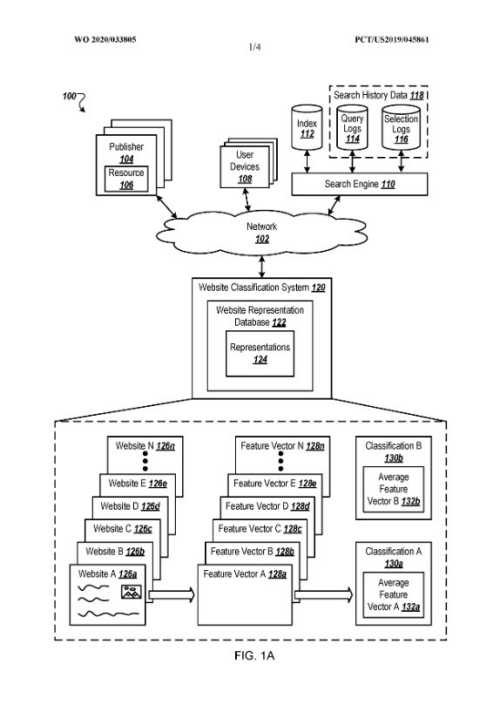

The search engine may use data from this website representation vectors classification system to return search results.

That classification system may use representations for each of many websites A-N and use the representations to determine a classification for each of the many websites A-N.

The search engine may determine a classification for a search query and use it to choose a category of websites with the same or a similar classification.

It may return search results from that category of sites.

The classifications of sites depend on the features that the sites contain.

Classification of Websites in the Website Representation Vectors Patent

This was the part of the patent’s description that I was most interested in finding.

It starts by telling us that this website representation vectors classification system could use any appropriate method to generate classifications, which provides Google with a lot of flexibility.

But then it goes into more detail by telling us that classification depends on content from websites to generate representations of those sites.

That content can include:

- Text from the website

- Images on the website

- Other website content, e.g., links

- Or a combination of two or more of these

The patent then provides details about how a neural network gets involved:

The website classification system may use a mapping that maps the website content for website A to a vector space representing a representation for website A.

For instance, the website classification system may use a neural network representing the mapping to create a feature vector A representing website A using the content of website A as input to the neural network.
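The patent does not describe the neural network itself, so the Python sketch below swaps in a much simpler technique, a hashed bag of words, purely to show what "mapping website content to a vector" looks like in practice. The function name `feature_vector` and the `dimensions` parameter are hypothetical. Unlike a trained network, this stand-in learns nothing about meaning.

```python
import zlib


def feature_vector(text, dimensions=8):
    """Map website text to a fixed-length vector by hashing each word to a slot.

    A stand-in for the neural network mapping: deterministic and untrained.
    """
    vector = [0.0] * dimensions
    words = text.lower().split()
    for word in words:
        # crc32 is stable across runs, unlike Python's built-in hash() for str.
        slot = zlib.crc32(word.encode("utf-8")) % dimensions
        vector[slot] += 1.0
    total = len(words)
    # Normalize so that long and short sites are comparable.
    return [count / total for count in vector] if total else vector


site_a = feature_vector("symptoms of mononucleosis include fever and fatigue")
print(len(site_a), round(sum(site_a), 6))
```

Whatever method produces it, the important property is that every site ends up as a vector of the same length, so sites can be averaged and compared.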

Labels Used in Website Representation Vectors

Website classification may rely on labels. The labels:

- May be alphanumeric, numerical, or alphabetical characters, symbols, or a combination of two or more of these

- Can state a type of entity that had the corresponding website published, such as a non-profit or a for-profit business

- May show an industry described on a site, such as artificial intelligence or education

- May state a type of person who authored a site, such as a doctor, a medical student, or a layperson

- Could also be scores that represent a website classification

The scores for classifications:

- Could be compared against different thresholds to place sites into categories

- May be specific to a particular knowledge domain

- Could classify a site as covering more than one knowledge domain

- Could be used to select sites responsive to queries for particular knowledge domains

- May indicate the authoritativeness of the respective website for the particular knowledge domain

- Or both

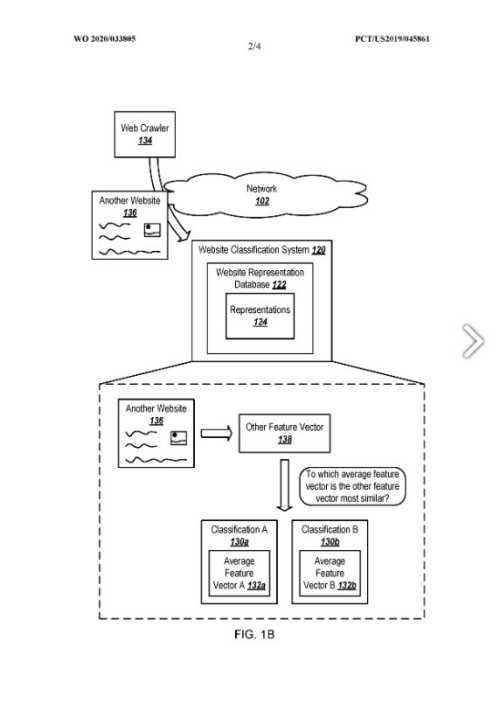

Data used to classify sites may include things such as:

- The position of particular words relative to each other, e.g., that the word “artificial” is generally near or next to the word “intelligence.”

- Particular phrases included in the website

- For each of the classifications A-B, a measure of difference, or a similarity measure, that represents a similarity between the respective classification and the other website

- The classification A-B that is most similar

- The classification A-B with the highest similarity measure, or with the shortest distance between the other feature vector and the respective average feature vector A-B, to name a few examples

- A ratio between two similarity measures to select a classification for the other website
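The last few items in that list can be illustrated with a short Python sketch. It assumes cosine similarity as the similarity measure and a hypothetical `min_ratio` cutoff for how much the best classification must beat the runner-up. The patent names similarity measures, distances, and ratios as options without fixing any of them.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def pick_classification(site_vector, average_vectors, min_ratio=1.2):
    """average_vectors maps each label to its average feature vector
    (at least two labels are expected).

    Returns the most similar label only when its similarity is at least
    min_ratio times the runner-up's; otherwise returns None."""
    scored = sorted(
        ((cosine_similarity(site_vector, vec), label)
         for label, vec in average_vectors.items()),
        reverse=True,
    )
    (best_score, best_label), (second_score, _) = scored[0], scored[1]
    if best_score <= 0:
        return None
    if second_score <= 0 or best_score / second_score >= min_ratio:
        return best_label
    return None


averages = {"A": [1.0, 0.0], "B": [0.0, 1.0]}
print(pick_classification([0.9, 0.1], averages))
print(pick_classification([0.5, 0.5], averages))
```

A ratio test like this is one way to avoid forcing a label onto a site that sits halfway between two classifications.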

This website representation vectors patent describes several other ways data may be used during the classification process.

Quality scores indicating a classification of a site may be measures of:

- Authoritativeness

- Responsiveness for a particular knowledge domain

- Another property of the website

- Or a combination of two or more of these

Takeaways from this Website Representation Vectors Classification Approach

- Text, images, and links within websites determine how they are classified

- Quality Scores of Classified Sites may indicate authoritativeness or how responsive a site may be for a particular knowledge domain, or both

- Labels used to classify sites could include information about the entity behind a site, the industry described in the site, and the type of person who authored a site

- A site might cover more than one knowledge domain

Last Updated February 23, 2020
