Table of Contents

What is Semantic Topic Modeling?

I came across some interesting papers at the Google Research pages on Semantic Topic modeling that I thought was worth sharing. One reminded me of Google’s use of co-occurrence of phrases in top-ranking pages for different queries and how that could be used to better understand thematic modeling on a site. I wrote about that in a post on the Go Fish Digital blog, which I called, Thematic Modeling Using Related Words in Documents and Anchor Text

Related Content:

The paper that reminded me of that post was this one:

Improving Semantic Topic Clustering for Search Queries with Word Co-Occurrence and bigraph co-clustering. The paper is by Jing Kong, Alex Scott, and Georg M. Goerg

Techniques for Semantic Topic Modeling

The abstract from the paper tells us, “We present two novel techniques that can discover semantically meaningful topics in search queries: (i) word co-occurrence clustering generates topics from words frequently occurring together; (ii) weighted bigraph clustering uses URLs from Google Search Results to induce query similarity and generate topics.”

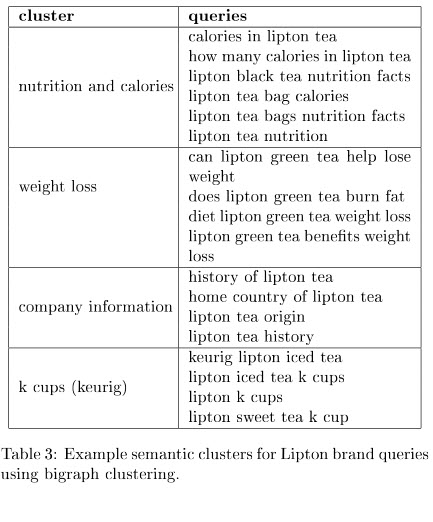

The paper provides examples from sets of pages about Lipton Brand products and queries involving makeup and cosmetics.

Learning from Query Information

Query information can provide interesting and helpful business insights. Knowing how often people search for particular products and brands can tell us how many people are interested in those products, as well as what people might associate with those brands. For example, clustering products into categories such as “beauty products” can give us insights into what people are searching for in popular trends.

One of the difficulties that you could run across in trying to learn from topics that appear like this is that they show up in shortened contexts, such as tweets and queries, which means that you won’t learn from other terms that appear near them or with them. For instance, the word “Lipton” might show up frequently near words that are related to “tea” and to “soup”.

Word Co-occurrence is when the same terms or phrases appear frequently (not counting stop words) in documents that might rank highly for a particular query term, meaning that those words are likely semantically related to the terms they rank highly for.

A biterm clustering approach can involve taking words from lots of documents that rank for a certain query, and putting them all together, and pulling out two-word terms that appear in those documents (and clustering them by how frequently they appear).

Purpose of Semantic Topic Modeling Techniques

The paper details these word co-occurrence and weighted bi-graph clustering approaches and how they are used.

Remember, the point of this paper is to describe how these two different approaches can help better understand topics that might be somewhat related are being displayed. When “Lipton” is being talked about, is it in the context of “tea” or “soup?”

A range of brand-related queries can show off topics that might be related to those queries. We are shown this table to give us some examples:

In the discussions section of the semantic topic modeling paper, they tell us which approach worked best in different circumstances. It was an interesting conclusion and left me wanting to do more research.

Insights from Paper Citations

Fortunately, the citations in this paper looked like they might be worth exploring, so I found links for the pages most of them appear upon, and some excerpts from those that give a sense of those. Some of these appear to have been around for a while, and some appear to discuss topics that you may have seen referred to in the past. Some of them specifically apply to social media which is well known for having sparse amounts of text, such as Twitter. Many of those seemed interesting enough that I wanted to find links to them, and read more (and thought they were worth sharing)

Agglomerative Clustering of a Search Engine Query Log (2000)

The clustering strategy applied here follows from two related observations. First, the fact that users with the same informational need may phrase their query differently to a search engine, cheetahs, and wild cats, but select the same URL from among those offered to fulfill that need suggests that those queries are related. Second, the fact that after issuing the same query, users may visit two different URLs www.funds.com and www.mutualfunds.com, is evident that the URLs are similar.

In this paper we consider the problem of modeling text corpora and other collections of discrete data. The goal is to find short descriptions of the members of a collection that enable efficient processing of large collections while preserving the essential statistical relationships that are useful for basic tasks such as classification, novelty detection, summarization, and similarity and relevance judgments.

We illustrate this idea with a concrete example. Consider a travel brochure with the sentence “In

the near future, you could find yourself in .” Both the low-level syntactic context of a word and its document context constrain the possibilities of the word that can appear next. Syntactically, it is going to be a noun consistent as the object of the preposition “of.” Thematically, because it is in a travel brochure, we would expect to see words such as “Acapulco,” “Costa Rica,” or “Australia” more than “kitchen,” “debt,” or “pocket.” Our model can capture these kinds of regularities and exploit them in predictive problems.

Co-clustering documents and words using bipartite spectral graph partitioning.

In this paper, we consider the problem of simultaneous or co-clustering of documents and words. Most of the existing work is on one-way clustering, i.e., either document or word clustering. A common theme among existing algorithms is to cluster documents based upon their word distributions while word clustering is determined by co-occurrence in documents. This points to a duality between document and term clustering.

Probabilistic latent semantic indexing

Probabilistic Latent Semantic Analysis is a novel statistical technique for the analysis of two-mode and co-occurrence data, which has applications in information retrieval and filtering, natural language processing, machine learning from text, and in related areas.

Empirical study of topic modeling in Twitter

In Twitter, popular information that is deemed important by the community propagates through the network. Studying the characteristics of content in the messages becomes important for several tasks, such as breaking news detection, personalized message recommendation, friends recommendation, sentiment analysis, and others. While many researchers wish to use standard text mining tools to understand messages on Twitter, the restricted length of those messages prevents them from being employed to their full potential.

A vector space model for automatic indexing

In a Document Retrieval of other document matching environment where stored entities (documents) are compared with each other or with incoming patterns (search requests), It appears that the best indexing property space is one where each entity lies as far away from the others as possible; in these circumstances, the value of an indexing system may be expressible as a function of the density of the object space; in particular, retrieval performance may correlate inversely with space density. An approach based on space density computations is used to choose an optimum indexing vocabulary for a collection of documents. Typical evaluation results are shown, demonstrating the usefulness of the model. Key Words and Phrases: automatic information retrieval, automatic indexing, content analysis, documents

The Mathematical theory of information

The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point. Frequently the messages have meaning; that is they refer to or are correlated according to some system with certain physical or conceptual entities. These semantic aspects of communication are irrelevant to the engineering problem. The significant aspect is that the actual message is selected from a set of possible messages. The system must be designed to operate for each possible selection, not just the one which will be chosen since this is unknown at the time of design.

Topics over time: a non-Markov continuous-time model of topical trends

We present results on nine months of personal email, 17 years of NIPS research papers and over 200 years of presidential state-of-the-union addresses, showing improved topics, better timestamp prediction, and interpretable trends.

Twitter-rank: Finding topic-sensitive influential Twitterers

This paper focuses on the problem of identifying influential users of micro-blogging services. Twitter, one of the most notable micro-blogging services, employs a social-networking model called “following”, in which each user can choose who she wants to “follow” to receive tweets from without requiring the latter to give permission first

Dis-criminative Bi-Term Topic Model for Headline-Based Social News Clustering

Social news is becoming increasingly popular. News organizations and popular journalists are starting to use social media more and more heavily for broadcasting news. The major challenge in social news clustering lies in the fact that textual content is only a headline, which is much shorter than the fulltext

A Biterm Topic Model for Short Texts

Uncovering the topics within short texts, such as tweets and instant messages, has become an important task for many content analysis applications. However, directly applying conventional topic models (e.g. LDA and PLSA) on such short texts may not work well. The fundamental reason lies in that conventional topic models implicitly capture the document-level word co-occurrence patterns to reveal topics, and thus suffer from the severe data sparsity in short documents.

Search News Straight To Your Inbox

*Required

Join thousands of marketers to get the best search news in under 5 minutes. Get resources, tips and more with The Splash newsletter: