Google has recently been working on responding to answer-seeking queries, as I wrote about in the post What are Good Answers for Queries that Ask Questions?

Sometimes a query is best answered by a list of links to documents found on the web (e.g., “best restaurants in NYC”), and at other times a concise, direct answer would serve a searcher best (e.g., “When was the Declaration of Independence signed?”).

We see Google try to answer questions in answer boxes at the tops of organic results in SERPs. A paper published in November of last year in the Google Research Publications database shows us what better questions look like, how they are shaped, and how Google may engage in rewriting questions. The paper is: How to Ask Better Questions? A Large-Scale Multi-Domain Dataset for Rewriting Ill-Formed Questions. We also see Google showing us People Also Ask questions that it provides answers to, along with links to the sources of those answers.

The focus of this paper is “how to ask better questions?” and how to rewrite questions to make them better. The abstract tells us more:

We present a large-scale dataset for the task of rewriting an ill-formed natural language question to a well-formed one. Our multi-domain question rewriting (MQR) dataset is constructed from human contributed Stack Exchange question edit histories. The dataset contains 427,719 question pairs which come from 303 domains. We provide human annotations for a subset of the dataset as a quality estimate. When moving from ill-formed to well-formed questions, the question quality improves by an average of 45 points across three aspects. We train sequence-to-sequence neural models on the constructed dataset and obtain an improvement of 13.2% in BLEU-4 over baseline methods built from other data resources. We release the MQR dataset to encourage research on the problem of question rewriting.

The paper includes a summary of what it contributes to solving the problem of rewriting questions, and lays those contributions out like this:

- They propose the task of rewriting questions, which involves changing ill-formed textual questions into well-formed ones while preserving their semantics, or meaning.

- They built a large-scale multi-domain question rewriting dataset (MQR) from human-generated Stack Exchange question edit histories. The development and test sets are considered to be of high quality according to human annotations. They have released the MQR dataset to encourage research on the question rewriting task.

- They have benchmarked several neural models trained on the MQR dataset against neural models trained on other question rewriting datasets and against other paraphrasing techniques. They tell us that models trained on the MQR and Quora datasets combined, followed by grammatical error correction, perform the best in the MQR question rewriting task.

Since the authors released this paper hoping to encourage more research into the question rewriting process, it made sense to blog about this project and share their paper.

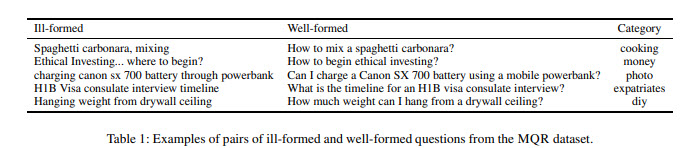

The paper points out examples of ill-formed and well-formed questions from the MQR Dataset:

Evaluating Well-Formed Questions

The paper also looks at related research and at how to evaluate the changes made when rewriting questions. When judging whether a question is well-formed, it asks:

1. Is the question grammatically correct?

2. Is the spelling correct? Misuse of the third-person singular or past tense in verbs is considered a grammatical error rather than a spelling error. A missing question mark at the end of a question is also counted as a spelling error.

3. Is the question explicit, rather than a search query, a command, or a statement?
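
To make those criteria a little more concrete, here is a minimal sketch of how someone might pre-screen the questions on their own pages. It is not the paper's method (the paper's quality estimates come from human annotations), and it does not attempt the grammar check at all. The `QUESTION_OPENERS` list and the function names `check_question` and `looks_explicit` are my own assumptions, chosen for illustration:

```python
import re

# Hypothetical list of words that commonly open an English question.
# This list is an assumption for illustration; it is not from the paper.
QUESTION_OPENERS = {
    "who", "what", "when", "where", "why", "which", "how",
    "is", "are", "was", "were", "do", "does", "did",
    "can", "could", "should", "would", "will",
}


def check_question(text: str) -> dict:
    """Return rough heuristic flags for a candidate question."""
    stripped = text.strip()
    words = re.findall(r"[A-Za-z']+", stripped.lower())
    first_word = words[0] if words else ""
    return {
        # The post notes a missing question mark is counted as a spelling error.
        "ends_with_question_mark": stripped.endswith("?"),
        # Crude proxy for "explicit question" versus search query or statement.
        "starts_like_a_question": first_word in QUESTION_OPENERS,
        "starts_with_capital": stripped[:1].isupper(),
    }


def looks_explicit(text: str) -> bool:
    """True when the text both opens and closes like an explicit question."""
    flags = check_question(text)
    return flags["ends_with_question_mark"] and flags["starts_like_a_question"]


if __name__ == "__main__":
    for candidate in (
        "When was the Declaration of Independence signed?",
        "best restaurants in NYC",
    ):
        print(candidate, "->", check_question(candidate))
```

A check like this will miss plenty of cases (a command ending in a question mark, for example), so it is best treated as a first pass before a human review.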

In looking at pairs of ill-formed and well-formed questions, another aspect of the analysis is whether or not they both seem to be semantically similar, or carry the same meaning.
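
As a rough illustration of that last check, the sketch below scores how many words two versions of a question share. This is only a crude stand-in that I am assuming for illustration: word overlap is not the same thing as shared meaning, and the paper relies on human annotations rather than anything like this. The function names and the example pair are hypothetical:

```python
import re


def token_set(text: str) -> set:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard_overlap(a: str, b: str) -> float:
    """Share of distinct words the two texts have in common (0.0 to 1.0)."""
    tokens_a, tokens_b = token_set(a), token_set(b)
    if not tokens_a and not tokens_b:
        return 1.0  # two empty texts are treated as identical
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


if __name__ == "__main__":
    # Hypothetical ill-formed / well-formed pair, not taken from the MQR dataset.
    ill_formed = "declaration of independence signed when"
    well_formed = "When was the Declaration of Independence signed?"
    print(round(jaccard_overlap(ill_formed, well_formed), 3))
```

A high score suggests a rewrite kept the original wording, while a low score is a hint to look more closely at whether the meaning drifted.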

Conclusion About Rewriting Questions

This paper from Google explores rewriting poorly written questions, and how that rewriting process is evaluated. It provides some insights into how question rewriting can be done to be useful to a wider audience. It also shows us that Google is making an effort to show off well-formed questions and provide answers to those.

When I was reading it, I was reminded of a paper from Google called Biperpedia: An Ontology for Search Applications. One of the lines from the Biperpedia paper that stuck out to me was a statement that they were tracking what they referred to as “canonical queries” or the best way to phrase queries that they were finding in query logs. I had wondered if that might include finding the best way to phrase questions that searchers might ask when searching, and it may have. Then again, that issue may have been one that they wanted to do more research on, and this paper from last November was one way of trying to better understand and re-write questions that might appear as queries.

When we see a question appearing in a featured snippet or as a People Also Ask question, it probably provides the most value to searchers if it is a well-formed question, rather than an ill-formed one.

This shows us that Google isn’t just looking for answers to questions, but also for better questions to pair those answers with when it shows featured snippets and People Also Ask questions.

The steps the paper describes for evaluating well-formed questions are probably worth following when you review the questions that you include, and answer, on your own pages.

Providing better questions is the first step towards being seen as the source of answers for those questions.
