Mapping Image Queries at Google

Many people carry phones with cameras in them, so searching with photos seems likely to become more popular. A recent patent from Google, granted in November of last year, is about searching using images.

I saved the patent to write about because I have been writing about some other approaches from Google involving image search. Google does have a history of trying to better understand what images might contain.

I wrote last year about Google adding semantic categories with ontologies to image search results. That post was Google Image Search Labels Becoming More Semantic?

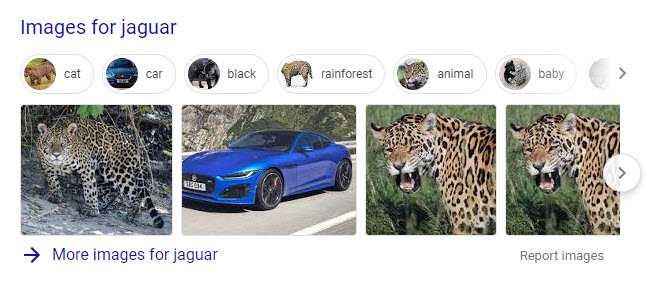

Imagine my surprise when I searched for [jaguar], expecting to see some images of cats in the search results, like Google used to show in Universal Search results. They used to mix the results they showed to include images, news, local, videos, and web results for a query. Results for [jaguar] included cats and cars, showing categories above them related to a [jaguar] search and an ontology related to that search:

Under this new patent, someone might search using an image – from a photograph or on their computer.

Google has released an App about photography search – Google Lens.

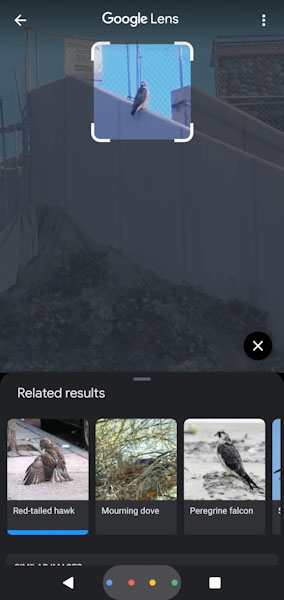

Google Lens recognized that the bird in this photo that I took last week is a hawk:

It allowed me to select an object in my photograph for it to attempt to identify.

This new patent doesn’t mention Google Lens. I wrote a post in the past on a patent about Google Goggles, and the improvements that might happen to that App, in New Visual Search Photo Features from Google. Google Goggles ended up shut down as an App. Fortunately, it seems that Google Lens now has many of the same features, including focusing on an object included in a picture (like I did in my example with the hawk).

In response to a query image search, a search engine may annotate an image with image labels (query image labels that tag features in the query image.)

If I search with an image of me, Google recognizes the image of me (or reads the file name of the image, and takes it from there.):

Google tells us how an image might be labeled during an image search:

The query image labels tag coarse-grained features of the query image and, in some cases, fine-grained features of the query image. Based on the query image labels, the system identifies one or more entities associated with the query image labels, e.g., people, places, television networks, or sports clubs, and identifies one or more candidate search queries using the identified one or more entities. The system uses the identified entities and query image labels to bias the scoring of candidate search queries towards those that are relevant to the user, independent of whether the query image is tagged with fine-grained labels or not. The system provides one or more relevant representative search queries for output.

(Added 1/16/2020: Another post about a recent patent from Google about the labels it is putting on images is How Google May Annotate Images to Improve Search Results.)

The patent tells us about how “innovative aspects” may be embodied that include the actions of:

- Receiving a query image

- Receiving one or more entities associated with the query image

- Identifying, for the entities, one or more candidate search queries pre-associated with the entities

- Generating a respective relevance score for each candidate search query

- Selecting, as a representative search query for the query image, a candidate search query based on the respective relevance scores

- Providing a representative search query in response to receiving the query image
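
The steps above can be sketched in a few lines of Python. This is only an illustration, not how Google implements it: the `QUERY_MAP` and `SCORES` tables and both function names are hypothetical. The 0.7 and 0.8 scores are borrowed from the patent's own Gherkin example, and the 0.4 score is made up.

```python
# Hypothetical pre-computed map from entities to candidate queries.
QUERY_MAP = {
    "The Gherkin": [
        "How tall is The Gherkin?",
        "What style of architecture is The Gherkin?",
    ],
    "City of London": ["Driving directions to The Gherkin"],
}

# Hypothetical relevance scores; a real system would compute these.
SCORES = {
    "How tall is The Gherkin?": 0.7,
    "What style of architecture is The Gherkin?": 0.8,
    "Driving directions to The Gherkin": 0.4,
}


def candidate_queries(entities, query_map):
    """Collect, in order and without duplicates, the queries pre-associated with the entities."""
    candidates = []
    for entity in entities:
        for query in query_map.get(entity, []):
            if query not in candidates:
                candidates.append(query)
    return candidates


def representative_query(entities, query_map, scores):
    """Return the highest-scoring candidate query, or None if there are no candidates."""
    candidates = candidate_queries(entities, query_map)
    if not candidates:
        return None
    return max(candidates, key=lambda q: scores.get(q, 0.0))


print(representative_query(["The Gherkin", "City of London"], QUERY_MAP, SCORES))
```

With these made-up tables, the architecture question wins because it carries the highest score.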

Relevance Scores for Entities in Images

So, how do those relevance scores for entities get calculated?

The patent description does provide details about such a score:

A respective relevance score for each of the candidate search queries involves:

- Determining whether the context of the query image is a match for the candidate search query

- Generating a respective relevance score for the candidate search query

“Determining whether the context of the query image is a match for the candidate search query” means whether an image has a place associated with matching the search query (taking photos of buildings or statues at places those are known for.)

That determination may also mean receiving a natural language query, and generating a respective relevance score for each of the candidate search queries based at least on the received natural language query.

How is this done?

For each of the candidate search queries:

- Generating a search results page using the candidate search query

- Analyzing the generated search results page to determine a measure indicative of how interesting and useful the search results page is

- Based on the determined measure, generating a respective relevance score for the candidate search query

In some cases, generating a respective relevance score for each of the candidate search queries comprises:

- Determining a popularity of the candidate search query

- Based on the determined popularity, generating a respective relevance score for the candidate search query
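
The patent names these signals (context match, how interesting and useful the results page is, and popularity) but does not say how they are combined. As a rough sketch, and assuming a simple weighted sum with made-up weights, a combined relevance score might look like this. The function name, the weights, and the 0-to-1 scale are all assumptions.

```python
def relevance_score(context_match, page_usefulness, popularity,
                    weights=(0.4, 0.3, 0.3)):
    """Combine three signals into one score in the range 0..1.

    context_match:   True if the image context matches the candidate query.
    page_usefulness: 0..1 measure of how interesting/useful the results page is.
    popularity:      0..1 measure of how popular the candidate query is.
    weights:         hypothetical weights; the patent does not specify any.
    """
    signals = (1.0 if context_match else 0.0, page_usefulness, popularity)
    return sum(w * s for w, s in zip(weights, signals))


# A query that matches the context outranks one that does not, all else equal.
print(relevance_score(True, 0.5, 0.5) > relevance_score(False, 0.5, 0.5))
```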

Image Query Label Scores

In some other cases, receiving one or more entities that are associated with the query image comprises:

- Obtaining one or more query image labels

- Identifying, for one or more of the query image labels, one or more entities that are pre-associated with the one or more query image labels

The patent tells us about a couple of different kinds of image labels:

The one or more query image labels comprise fine-grained image labels, or they may comprise coarse-grained image labels.

The process behind the patent may also involve generating a respective label score for each of the query image labels.

In some implementations, a respective label score for a query image label is based at least on a topicality of the query image label.

A respective label score for a query image label may be based at least on how specific the label is.

A respective label score for a query image label could also be based at least on the reliability of the backend from which the query image label is obtained and on a calibrated backend confidence score.

Selecting a particular candidate search query based at least on the candidate query scores and the label scores comprises:

- Determining an aggregate score between each label score and associated candidate query score

- Ranking the determined aggregate scores

- Selecting a particular candidate search query that corresponds to a highest ranked score

A selection of a particular candidate search query can be based at least on the candidate query scores following a process of:

- Ranking the relevance scores for the candidate search queries

- Selecting a particular candidate search query that corresponds to a highest ranked score
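
Here is a minimal sketch of the first selection process, the one that aggregates label scores with candidate query scores. The patent does not define the aggregation function, so multiplying the two scores is purely an assumption, and the example queries and numbers are hypothetical.

```python
def select_query(candidates):
    """Pick the query with the highest aggregate score.

    candidates: list of (query, query_score, label_score) tuples, where
    label_score belongs to the image label that led to the query.
    The aggregate here is a simple product (an assumption).
    """
    ranked = sorted(candidates, key=lambda c: c[1] * c[2], reverse=True)
    return ranked[0][0] if ranked else None


candidates = [
    # A slightly less relevant query, but from a very reliable label.
    ("How tall is The Gherkin?", 0.7, 0.9),
    # A more relevant query, but from a weak, generic label.
    ("London weather", 0.8, 0.5),
]
print(select_query(candidates))
```

In this example the label score changes the outcome: ranking by query score alone would pick "London weather", while the aggregate favors the query tied to the stronger label.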

The patent behind mapping Images to search queries can be found at:

Mapping images to search queries

Inventors: Matthew Sharifi, David Petrou, and Abhanshu Sharma

Assignee: Google LLC

US Patent: 10,489,410

Granted: November 26, 2019

Filed: April 18, 2016

Abstract

Methods, systems, and apparatus for receiving a query image, receiving one or more entities that are associated with the query image, identifying, for one or more of the entities, one or more candidate search queries that are pre-associated with the one or more entities, generating a respective relevance score for each of the candidate search queries, selecting, as a representative search query for the query image, a particular candidate search query based at least on the generated respective relevance scores and providing the representative search query for output in response to receiving the query image.

This specification describes a system for generating text search queries using image-based queries (a search with a photograph).

This search system combines a set of visual recognition results for the received image-based query with search query logs and known search query attributes to generate relevant natural language candidate search queries for the input image-based search query.

The natural language candidate search queries are biased towards search queries that:

- Match the user’s intent

- Generate interesting or relevant search results pages

- Or are determined to be popular search queries

Combined Image Queries with Natural Language Queries

In some cases, a search system may receive both an image-based search query and a natural language query (text that may have been spoken and derived using speech recognition technology).

The search system may combine a set of visual recognition results for the received image-based search query with search query logs and known search query attributes to generate relevant natural language candidate search queries for the input image-based search query.

The natural language candidate search queries are biased towards search queries that:

- Match the user’s intent

- Generate interesting or relevant search results pages

- Are determined to be popular search queries

- Include or are associated with the received natural language query

Coarse-Grained Image Features and Fine-Grained Image Features

The patent tells us that it might use both coarse-grained image features and fine-grained image features to map an image to a specific search query. So what is the difference between the two?

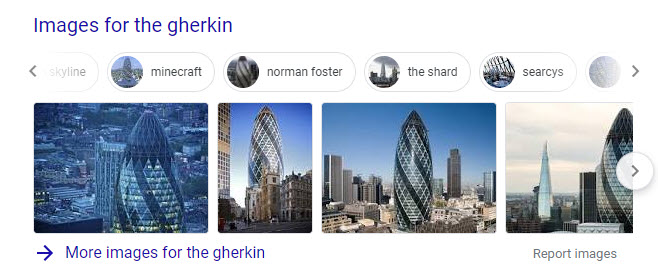

As an example, the query image may include a picture of a book on a table. In such a case, a coarse-grained feature of the query image may be the book and a fine-grained feature may be the title or genre of the book. In the example query image depicted in FIG. 1, coarse-grained query image features may include “city” or “buildings,” and fine-grained features may include “London” or “The Gherkin.”

The patent tells us that it might focus upon objects or features labeled by an image recognition system as being:

- Large (taking up a high amount of surface area of the image)

- Small (taking up a small amount of surface area of the image)

- Central (centered in the middle of the image)

A query image may include a picture of a book on a table.

In that picture, a large image feature may be the table and a small image feature may be the book.

The book may be a central image feature.
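
To make the large, small, and central distinction concrete, here is a small sketch that tags a feature from its bounding box. The patent gives no thresholds, so the cutoffs below (40% of the image for large, 10% for small, and a 15% tolerance around the center) are invented for illustration, as is the function name.

```python
def describe_feature(box, image_w, image_h,
                     large=0.4, small=0.1, center_tol=0.15):
    """Tag a feature as large, small, and/or central.

    box: (x, y, width, height) of the feature in pixels.
    large, small, center_tol: hypothetical thresholds.
    """
    x, y, w, h = box
    area = (w * h) / (image_w * image_h)   # share of the image covered
    cx = (x + w / 2) / image_w             # box center, as a 0..1 fraction
    cy = (y + h / 2) / image_h
    tags = set()
    if area >= large:
        tags.add("large")
    if area <= small:
        tags.add("small")
    if abs(cx - 0.5) <= center_tol and abs(cy - 0.5) <= center_tol:
        tags.add("central")
    return tags


# A 1000x800 photo: the table fills the lower half, the book sits in the middle.
table = describe_feature((0, 400, 1000, 400), 1000, 800)
book = describe_feature((450, 350, 100, 100), 1000, 800)
print(table, book)
```

With these numbers, the table is tagged as large and the book as both small and central, matching the book-on-a-table example.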

A Combined Image Query and Natural Language Query

In a search for the building the Gherkin (seen in the heading image for this post), someone may submit a photo of the building, and include a natural language query such as:

- “What style of architecture is The Gherkin?”

- “How tall is the Gherkin?”

- “Who occupies The Gherkin?”

- “Driving directions to The Gherkin”

Search Results in Response to an Image Search

An example SERP may show links and snippets to results on different sites related to the image being searched, as well as a knowledge panel that provides “general information relating to the entity ‘The Gherkin,’ such as the size, age, and address of the building.”

The patent tells us that the search system may also:

- Identify more candidate search queries that are pre-associated with the one or more entities

- Generate respective scores for each of the candidate search queries

- Select a representative search query from the candidate search queries based on the generated scores

The patent also tells us that an image or video can be submitted as part of a search with a written natural language query or even a spoken natural language query (and both video and spoken queries are considered under this patent.)

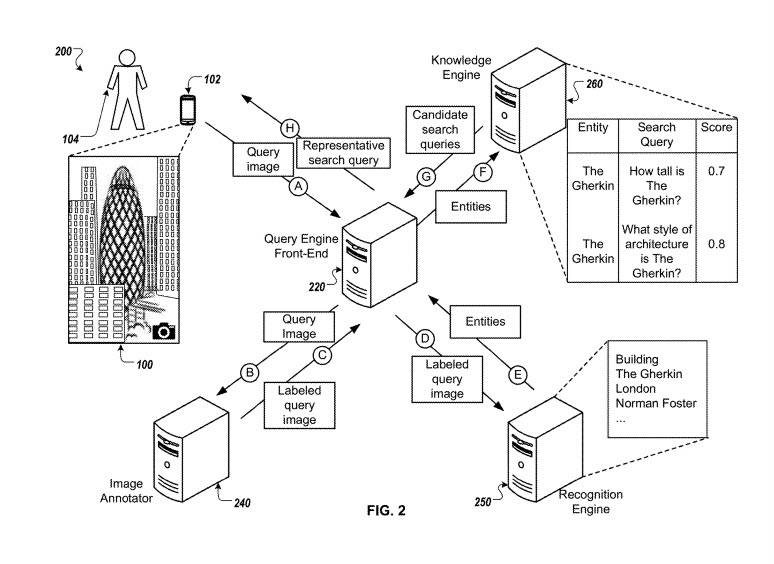

The patent tells us about an image annotator which can add query image labels (visual recognition results) for a user input query image. I find this interesting because I had Claire Carlile point out to me yesterday the fact that Google has started running their Image Labeler program again. It had previously been run as a game based upon the ESP Game by Luis von Ahn, who had invented the CAPTCHA program that Google also used. The purpose of the game is to help Google better understand which labels should apply to images by crowdsourcing human annotation of images.

The patent tells us more about image annotation, involving coarse-grained query image labels and fine-grained query image labels:

During operation (B), the image annotator can receive the data associated with the user-input query image and can identify one or more query image labels, e.g., visual recognition results, for the user-input query image. For example, the image annotator may include or be in communication with one or more back ends that are configured to analyze a given query image and identify one or more query image labels. The image annotator may identify fine-grained query image labels, (image labels that label specific landmarks, book covers or posters that are present in a given image), and/or coarse-grained image labels (image labels that label objects such as a table, book or lake.) For example, based on receiving the data associated with user-input photograph 206, the image annotator may identify fine-grained query image labels such as “The Gherkin,” or “London” for the user-input photograph 206 and may identify coarse-grained query image labels such as “Buildings,” or “city.” In some implementations, image annotator may return query image labels that are based on OCR or textual visual recognition results. For example, image annotator may identify and assign a name printed on a street sign that is included in the query image, or the name of a shop that is included in the image, as query image labels.

A query for a building could use image recognition to identify buildings such as the:

- “Eiffel Tower”

- “Empire State Building”

- “Taj Mahal”

The recognition engine may find the fine-grained label “The Gherkin” for the header image for this post, and may identify entities such as “Norman Foster” (architect), “Standard Life” (tenant), or “City of London” (location) as being associated with the user-input query image, based on comparing the query label “The Gherkin” to terms associated with a set of known entities.

That set of known entities may be accessible to the recognition engine through a database.
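
A toy version of that label-to-entity comparison might look like the sketch below. The `KNOWN_ENTITIES` table, its terms, and the exact-match rule are all assumptions made for illustration. A real recognition engine would draw on a far larger store of entities and likely use fuzzier matching.

```python
# Hypothetical store of known entities and the terms associated with each.
KNOWN_ENTITIES = {
    "Norman Foster": {"the gherkin", "architect"},
    "Standard Life": {"the gherkin", "tenant"},
    "City of London": {"the gherkin", "london"},
    "Eiffel Tower": {"eiffel tower", "paris"},
}


def entities_for_label(label, known_entities):
    """Return, alphabetically, the entities whose terms include the label."""
    key = label.strip().lower()
    return sorted(name for name, terms in known_entities.items() if key in terms)


print(entities_for_label("The Gherkin", KNOWN_ENTITIES))
```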

Based on identifying entities associated with the labeled user-input query image, the recognition engine may transmit data that identifies the entities and any additional context terms to the query engine. We see such associations going on in the labels for images that appear in SERPs for [gherkin], such as a mention of Norman Foster, and Searcys, a restaurant in the building:

This patent on mapping images shows us how entities in the knowledge graph can be mapped together to reflect related entities. We are told about a knowledge engine that connects these entities during an image query:

During operation (F), the query engine front-end can receive the data identifying the one or more entities, and can transmit the data identifying the entities to the knowledge engine. For example, the query engine front-end can receive information identifying the entities “The Gherkin,” “Norman foster,” “Standard Life,” and “City of London,” and can transmit data to the knowledge engine that identifies “The Gherkin,” “Norman foster,” “Standard Life,” and “City of London.” In some instances, the query engine front-end can transmit the data identifying the entities to the knowledge engine over one or more networks, or over one or more other wired or wireless connections.

It also tells us that candidate search queries (with answers) may be mapped to specific entities, using the Gherkin as an example, again:

The knowledge engine can receive the data identifying the entities and can identify one or more candidate search queries that are pre-associated with one or more entities. In some implementations, the knowledge engine can identify candidate search queries related to identified entities based on accessing a database or server that maintains candidate search queries relating to entities, e.g., a pre-computed query map. For example, the knowledge engine can receive information that identifies the entity “The Gherkin,” and the knowledge engine can access the database or server to identify candidate search queries that are associated with the entity “The Gherkin,” such as “How tall is The Gherkin” or “What style of architecture is the Gherkin?” In some implementations, the database or server accessed by the knowledge engine can be a database or server that is associated with the knowledge engine, e.g., as a part of the knowledge engine, or the knowledge engine can access the database or server, e.g., over one or more networks. The database or server that maintains candidate search queries related to entities, e.g., a pre-computed query map, may include candidate search queries in differing languages. In such cases, the knowledge engine may be configured to identify candidate search queries that are associated with a given entity in a language that matches the user’s language, e.g., as indicated by the user device or by a natural language query provided with a query image.
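
The pre-computed query map described in that passage could be pictured as a lookup keyed by entity and language. The sketch below is only a guess at the shape of such a map: the German query is a made-up entry, and falling back to English when no queries exist in the user's language is my own assumption, not something the patent states.

```python
# Hypothetical pre-computed query map keyed by (entity, language code).
QUERY_MAP = {
    ("The Gherkin", "en"): [
        "How tall is The Gherkin?",
        "What style of architecture is The Gherkin?",
    ],
    ("The Gherkin", "de"): ["Wie hoch ist The Gherkin?"],
}


def queries_for(entity, language, query_map, fallback="en"):
    """Return candidate queries for an entity in the user's language.

    Falls back to `fallback` (an assumption) if none exist in that language.
    """
    return query_map.get((entity, language)) or query_map.get((entity, fallback), [])


print(queries_for("The Gherkin", "de", QUERY_MAP))
```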

Google may look at queries that are pre-associated with entities that may be related to query images and provide scores for those queries. The patent shows an example of this:

The query engine front-end can receive the data that includes or identifies the one or more candidate search queries and their respective relevance scores from the knowledge engine and can select one or more representative search queries from the one or more candidate search queries based at least on the relevance scores (by ranking the one or more candidate search queries and selecting several highest scoring search queries as representative search queries.) For example, the query engine front-end may receive data that includes or identifies the candidate search queries “How tall is The Gherkin?” with relevance score 0.7 and “What style of architecture is the Gherkin?” with relevance score 0.8. Based on the relevance scores, the query engine front-end may select the candidate search query “What style of architecture is the Gherkin?” In some implementations, the query engine front-end may select one or more representative search queries from the one or more candidate search queries based on the relevance scores and the label scores received from the image annotator (by aggregating the relevance scores and label scores using a ranking function or classifier.)

Associated Locations and Image Queries

The context of a query image may play a role in determining whether the query image has an associated location that matches the candidate search query.

For example, a photograph of a coat may be understood to have been taken at a shopping mall. Based upon that context, the search system may generate higher respective relevance scores for candidate search queries that are related to shopping or commercial results for that image.

If another photo of a coat is perceived to have been taken within the home of the user, the search system may generate higher respective relevance scores for candidate search queries that are related to the weather, such as “do I need my coat today?”

Another example might be understanding the context to be a location corresponding to the current location of the searching device. For example, the search system may determine that an image of flowers is received in a specific town or neighborhood. The search system may generate higher respective relevance scores for candidate search queries that are related to nearby florists or gardening services.
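
One way to picture this kind of context bias is as a boost added to a base relevance score when the topic of a query suits the place where the photo was taken. The boost table, the topic names, and the additive formula below are hypothetical. The patent only says that such queries may receive higher scores.

```python
# Hypothetical boosts: context -> {query topic -> amount added to the score}.
CONTEXT_BOOSTS = {
    "shopping mall": {"shopping": 0.2},
    "home": {"weather": 0.2},
}


def contextual_score(base_score, query_topic, context):
    """Raise a base relevance score when the query topic fits the context."""
    boost = CONTEXT_BOOSTS.get(context, {}).get(query_topic, 0.0)
    return min(1.0, base_score + boost)  # keep the score within 0..1


# The same coat photo, taken in two different places.
print(contextual_score(0.5, "shopping", "shopping mall"))
print(contextual_score(0.5, "shopping", "home"))
```

The shopping query scores higher for the photo taken at the mall than for the one taken at home, where it receives no boost.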

Image Queries that Receive One Boxes and Web Answer Cards

In some cases, the relevance score may include a measure of how interesting and useful the search results page might be. The search system may then generate higher relevance scores for candidate search queries that produce search results pages with one boxes or web answer cards than for candidate search queries that produce search results pages with neither.

Image Queries Takeaways

How Queries are Mapped to Images

When a query consists solely of an image, Google may identify what is in the image, and labels associated with the image. It may try to understand what other entities might be related to what is in the image as well and see if any pre-associated queries are commonly associated with whatever the image might be of, or those related entities. It may use contextual clues such as location as well, to better understand the intent behind the image query.

If the image has a natural language query included with it, either typed or spoken, it may also consider those related entities and pre-associated queries from looking at query logs, and context from location too.

Having access to the semantic categories in image search can provide some clues as to what Google might show in Search Results in response to an image query.
