
The Google Assistant and Context-Based Natural Language Processing in a Dialog System

A couple of weeks ago, I wrote about Google Automated Assistant Search Results. That post focused on how a personal assistant might engage in a dialog with a searcher. The topic appears to be an area of focus at Google, because the search engine was just granted another patent about natural language processing in a dialog with a personal assistant.

Related Content:

Another related post, from April of this year, is about conversational queries that may be submitted to a personal assistant at Google. That post is Conversational Search Queries at Google (Context from Previous Sessions). It's fascinating to see the technology around a subject like this grow and develop.

My post for today is about a patent, granted on November 19, 2019, that covers natural language processing within a dialog system, like the conversations you might have with your Google Assistant. I've been using my Google Assistant more every day, and the most recent use that has me excited is Google Assistant Routines.

Problems with Dialog Systems Using Natural Language Processing

The patent I am writing about focuses on dialog systems such as the Google Assistant. In more detail, the patent tells us it is about: "natural language processing (NLP) methods that involve multimodal processing of user requests." Given Google's recent disclosure that it is using BERT to better understand natural language, NLP is clearly an area of growth for Google. What makes the process described in this patent challenging is that it involves "processing user requests," which we are told "are not reasonably understandable if taken alone or in isolation." A computer faces challenges when it tries to understand natural language, and that is true of the Google Assistant, too.

Google may understand such user requests by "identifying a speech or environmental context that encompasses the user requests."

So, that is the topic of this patent and the problem that it is intended to solve.

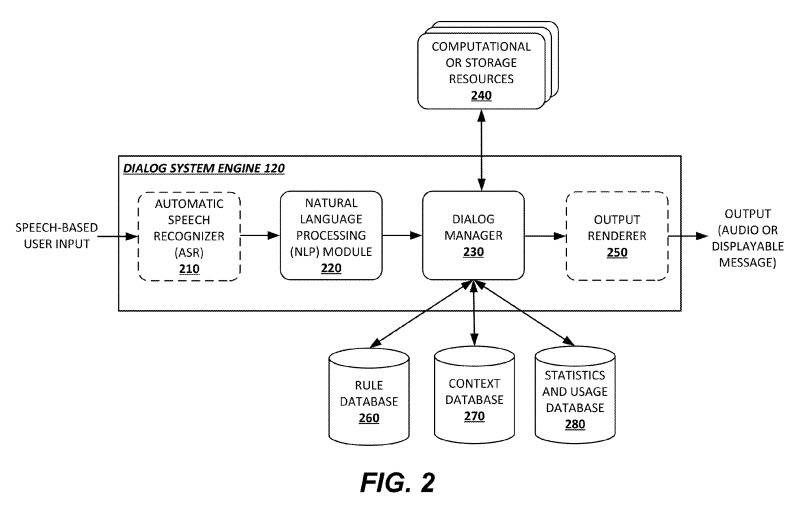

The patent gives us some background information about dialog systems. This is the first Google patent involving conversational search or spoken search results that I have seen refer to "dialog systems." Here is some of that background:

Conventional dialog systems are widely used in the information technology industry, especially in the form of mobile applications for wireless telephones and tablet computers. Generally, a dialog system refers to a computer-based agent having a human-centric interface for accessing, processing, managing, and delivering information. Dialog systems are also known as chat information systems, spoken dialog systems, conversational agents, chatter robots, chatterbots, chatbots, chat agents, digital personal assistants, automated online assistants, and so forth. All these terms are within the scope of the present disclosure and referred to as a “dialog system” for simplicity.

Reading this patent from Google reminded me of Apple's patent application for its intelligent personal assistant, Siri. I wrote about that application in 2012 and was struck by its description of "Active Ontologies," which are the "underlying infrastructure behind how the intelligent assistant builds models about data and knowledge." What this Google patent covers isn't quite the same, but it's worth looking at how Siri was envisioned as you learn more about how the Google Assistant is intended to interact with searchers and other users of the personal assistant. To go into more depth on how the Google Assistant is intended to work with people, here is a little more about the dialog system behind it:

Traditionally, a dialog system interacts with its users in natural language to simulate an intelligent conversation and provide personalized assistance to the users. For example, a user may generate requests to the dialog system in the form of conversational questions, such as “Where is the nearest hotel?” or “What is the weather like in Alexandria?,” and receive corresponding answers from the dialog system in the form of audio and/or displayable messages. The users may also provide voice commands to the dialog system requesting the performance of certain functions including, for example, generating e-mails, making phone calls, searching particular information, acquiring data, navigating, requesting notifications or reminders, and so forth. These and other functionalities make dialog systems very popular as they are of great help, especially for holders of portable electronic devices such as smartphones, cellular phones, tablet computers, gaming consoles, and the like.

The patent tells us that "conventional dialog systems" have problems with some kinds of user requests. For instance:

1. Conversational dialogs can cause issues: a user request such as "What is the weather like in New York?" might be followed by a second request such as "What about Los Angeles?", which makes little sense on its own.

2. When a dialog system is used to control an internal mobile application, it may not understand follow-up commands such as "Next."

3. When the physical environment changes, such as when someone travels to a new time zone, a request for the time may not be processed correctly.

These are some of the issues the patent identifies to show that there is a need to handle user requests whose meaning depends on a changing context, and the process described in the patent is intended to solve them.

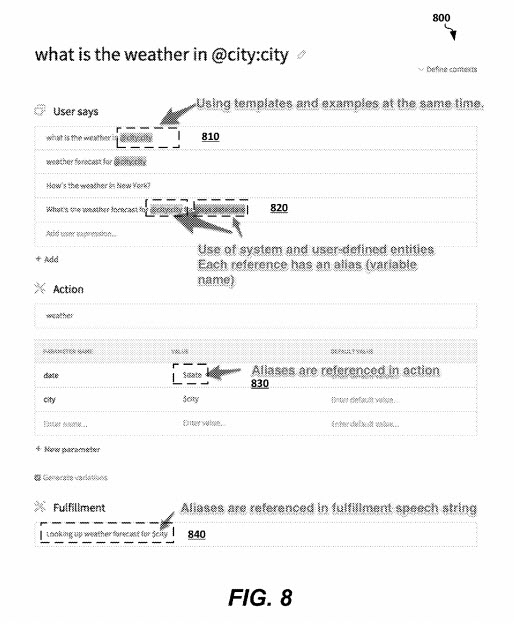

The image above shows a request that asks about the weather at one location and how, when a different location is requested, the question reuses a query template and changes only the entity (the location) involved.
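To make the "What about Los Angeles?" example concrete, here is a minimal Python sketch of how a speech context could carry the intent of a previous request into an elliptical follow-up. The regular-expression templates, the `SpeechContext` class, and the `interpret` function are hypothetical illustrations, not anything the patent specifies; a real system would rely on trained language understanding rather than two patterns.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical query template: "What is the weather like in {location}?"
WEATHER_TEMPLATE = re.compile(
    r"what is the weather like in (?P<location>[\w .'-]+?)\??$", re.IGNORECASE
)
# Hypothetical elliptical follow-up: "What about {location}?"
FOLLOW_UP_TEMPLATE = re.compile(r"what about (?P<location>[\w .'-]+?)\??$", re.IGNORECASE)


@dataclass
class SpeechContext:
    """Remembers the intent and entities of the previous request (illustrative only)."""
    intent: Optional[str] = None
    entities: dict = field(default_factory=dict)


def interpret(request: str, context: SpeechContext) -> dict:
    text = request.strip()
    match = WEATHER_TEMPLATE.match(text)
    if match:
        # A complete request: store its intent and entity as the new speech context.
        context.intent = "weather"
        context.entities = {"location": match.group("location")}
        return {"intent": context.intent, **context.entities}
    match = FOLLOW_UP_TEMPLATE.match(text)
    if match and context.intent is not None:
        # An incomplete request: reuse the previous template, swap only the entity.
        context.entities = {**context.entities, "location": match.group("location")}
        return {"intent": context.intent, **context.entities}
    # Without a prior context, the follow-up is not understandable on its own.
    return {"intent": "unknown"}
```

With a fresh `SpeechContext`, "What is the weather like in New York?" yields `{'intent': 'weather', 'location': 'New York'}`, and a following "What about Los Angeles?" yields `{'intent': 'weather', 'location': 'Los Angeles'}`. Sent alone, the follow-up returns `{'intent': 'unknown'}`, which is the problem the patent describes.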

Before I go into what the patent says about how it might address these issues involving user requests, I want to point you to the Google Assistant Developer Community, where you can learn the most up-to-date information being discussed in that community.

The patent's description introduces the issues I wrote about above as a reason for filing this recently granted patent, and it tells us how those problems may be addressed.

What Makes This Approach Context-Based Natural Language Processing?

The patent begins by telling us that it works to identify contexts related to particular user requests, and then processes those user requests with a Dialog System Engine based on those contexts.

Contexts usually refer to speech contexts, based on a sequence of prior user requests or dialog system answers.

Contexts also refer to environmental contexts, which are based on:

- Geographical locations

- Weather conditions

- User actions

- User motions

- Directions

- Etc.

Sometimes these environmental contexts involve more than just dialog and can extend to other means of input, such as touches, clicks, and interactions with a device (e.g., in a car: voice, steering wheel controls, and touch controls on the infotainment center).
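The time zone problem from the list earlier shows why environmental context matters. The sketch below assumes, hypothetically, that the device reports its current UTC offset in minutes along with each request; the `EnvironmentalContext` class and `answer_time_request` function are illustrative names, not part of the patent or any Google API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class EnvironmentalContext:
    """Hypothetical attributes a device might send along with a request."""
    utc_offset_minutes: int    # current offset reported by the device
    input_mode: str = "voice"  # e.g. "voice", "touch", "steering_wheel"


def answer_time_request(context: EnvironmentalContext, now_utc: datetime) -> str:
    """Answer 'What time is it?' using the device's current offset, not a stored home zone."""
    local_tz = timezone(timedelta(minutes=context.utc_offset_minutes))
    local_time = now_utc.astimezone(local_tz)
    return f"It is {local_time:%H:%M} where you are."
```

For a request made at 20:30 UTC, a device reporting an offset of -480 minutes (UTC-8) gets "It is 12:30 where you are." A real device would more likely report a location or an IANA time zone name, which Python's `zoneinfo` module can resolve; a plain offset keeps this sketch free of time zone data dependencies.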

The dialog system may include a set of rules that provide instructions "on how the Dialog System Engine shall respond to a particular user request received from the Dialog System Interface."

Those rules may be implemented by using:

- Machine learning classifiers

- Rule engines

- Heuristics algorithms

- Etc.
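Because the patent says these rules may be implemented with machine learning classifiers, rule engines, or heuristics, one way to picture this is a shared interface that any of those implementations could satisfy. This Python sketch is an assumption for illustration: `KeywordHeuristic` and `PatternRule` are hypothetical stand-ins for a heuristic and a rule engine, and a trained classifier could implement the same `matches` method.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Protocol


class Matcher(Protocol):
    """Anything that can decide whether a rule applies to a request."""
    def matches(self, text: str) -> bool: ...


@dataclass
class KeywordHeuristic:
    """Heuristic: fire when enough of the keywords appear in the request."""
    keywords: frozenset
    min_hits: int = 1

    def matches(self, text: str) -> bool:
        words = set(re.findall(r"[a-z']+", text.lower()))
        return len(words & self.keywords) >= self.min_hits


@dataclass
class PatternRule:
    """Rule-engine style: fire when a regular expression matches."""
    pattern: str

    def matches(self, text: str) -> bool:
        return re.search(self.pattern, text, re.IGNORECASE) is not None


@dataclass
class DialogRule:
    name: str
    matcher: Matcher
    response: str


# Hypothetical rules; order sets priority.
RULES: List[DialogRule] = [
    DialogRule("find_hotel", KeywordHeuristic(frozenset({"hotel", "motel", "inn"})),
               "Searching for nearby hotels."),
    DialogRule("set_reminder", PatternRule(r"\bremind me to\b"), "Creating a reminder."),
]


def respond(text: str) -> Optional[str]:
    """Return the response of the first rule whose matcher fires, if any."""
    for rule in RULES:
        if rule.matcher.matches(text):
            return rule.response
    return None
```

Keeping the matching logic behind one interface means the Dialog System Engine's rule-selection loop does not change when a heuristic is swapped for a classifier.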

The Dialog System Engine also uses a context database for speech and environmental contexts, and those context expressions may relate to "terms, keywords, phrases and/or questions virtually linked with a certain `entity` (i.e., a dialog system element)." The environmental contexts may be defined by the:

- Device of the user

- User profile

- Geographical location

- Environmental conditions

- Etc.
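A context database that links expressions to entities can be pictured as a reverse index from phrases to dialog system elements. The entries below and the `build_index` and `find_entities` helpers are hypothetical; simple substring matching stands in for whatever matching the Dialog System Engine actually performs.

```python
from typing import Dict, List

# Hypothetical context database: each entity (dialog system element) is linked
# to terms, keywords, phrases, and questions that signal it.
CONTEXT_EXPRESSIONS: Dict[str, List[str]] = {
    "weather": ["weather", "forecast", "is it raining", "temperature"],
    "time": ["what time", "current time"],
    "navigation": ["directions", "navigate", "how do i get to"],
}


def build_index(db: Dict[str, List[str]]) -> Dict[str, str]:
    """Invert entity -> expressions into expression -> entity for lookups."""
    return {expr.lower(): entity for entity, exprs in db.items() for expr in exprs}


def find_entities(request: str, index: Dict[str, str]) -> List[str]:
    """Return the sorted entities whose linked expressions appear in the request."""
    text = request.lower()
    return sorted({entity for expr, entity in index.items() if expr in text})


INDEX = build_index(CONTEXT_EXPRESSIONS)
```

For example, "Is it raining in Alexandria?" maps to `['weather']`, and "Give me directions and the forecast" maps to `['navigation', 'weather']`.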

Keep in mind that while this dialog system may be used in devices such as smartphones and smart speakers, it isn’t limited to those and could be found in other devices and places such as cars.

The patent about this dialog system that uses context-based natural language processing can be found at:

Context-based natural language processing

Inventors: Ilya Gennadyevich Gelfenbeyn, Artem Goncharuk, Pavel Aleksandrovich Sirotin

Assignee: GOOGLE LLC

US Patent: 10,482,184

Granted: November 19, 2019

Filed: March 8, 2016

Abstract

A method for context-based natural language processing is disclosed herein. The method comprises maintaining a plurality of dialog system rules, receiving a user request from a Dialog System Interface, receiving one or more attributes associated with the user request from the Dialog System Interface or a user device, and identifying a type of context associated with the user request based on the user request and the one or more attributes. A context label is assigned to the user request associated with the type of context. Based on the context label and the user request, a particular dialog system rule is selected from the plurality of dialog system rules. A response to the user request is generated by applying the dialog system rule to at least a part of the user request.

As outlined above, the present technology provides for a platform enabling the creation and maintaining of custom Dialog System Engines serving as backend services for custom Dialog System Interfaces. A Dialog System Engine and Dialog System Interface, when interacting with each other, form a dialog system. One may refer to a Dialog System Interface running on or accessed from a client device as a "frontend" user interface, while a Dialog System Engine, which supports the operation of such a Dialog System Interface, can be referred to as a "backend" service. The present technology also provides for Dialog System Engines that are configured to accurately process user requests that are not generally understandable out of context.
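The steps in the abstract (maintain rules, receive a request with attributes, identify a context type, assign a context label, select a rule from the label and the request, then apply it) can be sketched in Python as follows. The attribute names `active_app` and `previous_intent`, the label format, and the rules themselves are hypothetical choices made only to illustrate the flow, not the patent's actual implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class UserRequest:
    text: str
    # Hypothetical attributes sent by the Dialog System Interface or user device.
    attributes: Dict[str, str] = field(default_factory=dict)
    context_label: Optional[str] = None


@dataclass
class Rule:
    context_label: str           # label this rule is registered for
    trigger: str                 # lowercase phrase that must appear in the request
    apply: Callable[[str], str]  # builds a response from (part of) the request text


def after_phrase(text: str, phrase: str) -> str:
    """Return the part of the request that follows `phrase`, tidied up."""
    tail = text.lower().split(phrase, 1)[1]
    return tail.strip(" ?.!").title()


# Step 1: maintain a plurality of dialog system rules (hypothetical examples).
RULES: List[Rule] = [
    Rule("environment:music_player", "next", lambda t: "Playing the next track."),
    Rule("speech:weather", "what about",
         lambda t: f"Here is the weather for {after_phrase(t, 'what about')}."),
]


def identify_context_type(request: UserRequest) -> str:
    """Steps 2-3: pick a context type; this heuristic looks only at attributes."""
    if "active_app" in request.attributes:
        return "environment:" + request.attributes["active_app"]
    if "previous_intent" in request.attributes:
        return "speech:" + request.attributes["previous_intent"]
    return "none"


def process(request: UserRequest, rules: List[Rule]) -> str:
    # Step 4: assign a context label to the request.
    request.context_label = identify_context_type(request)
    text = request.text.lower()
    # Step 5: select a rule based on both the context label and the request.
    for rule in rules:
        if rule.context_label == request.context_label and rule.trigger in text:
            # Step 6: generate a response by applying the rule to the request.
            return rule.apply(request.text)
    return "Sorry, I didn't understand that."
```

Here "Next" sent while `active_app` is `music_player` returns "Playing the next track.", "What about Los Angeles?" with `previous_intent` set to `weather` returns "Here is the weather for Los Angeles.", and "Next" with no attributes falls through to the fallback reply.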

Context-Based Natural Language Processing Takeaways

There are a number of technologies that could be used to power the Google Assistant, and one of the newest natural language processing (NLP) approaches to receive a lot of attention at Google is BERT, which reportedly powers featured snippets at Google. Given that one of the uses of the Google Assistant is to answer questions that searchers may have, it would make sense for Google to use BERT to handle many of the user requests made to the Assistant.

Understanding the contexts based upon speech and environment in responding to user requests may be powered by machine learning and algorithms designed to address both speech and environmental contexts. I’ve been experimenting with different features related to the Google Assistant on my phone to learn more about how it can be used.

In a related piece of news, Google recently announced that the "Top Stories" it might show in search results no longer have to be Google News stories from sites registered as Google News sites. Upon hearing this, I reached out to Google's Danny Sullivan on Twitter and asked him whether Speakable Schema was still limited to Google News stories, since Google had released it, before it became an official schema, as a beta available only to Google News sites.

His response to me:

Speakable is no longer restricted to news content; we'll be updating our documentation on this. However, using Speakable markup on any site isn't a guarantee that the Google Assistant will always use it. Speakable also remains a beta feature.

— Danny Sullivan (@dannysullivan) December 12, 2019

So, if you answer questions on your website that you believe people may ask on a smart speaker or smartphone, you may want to consider using Speakable Schema markup. Danny warned that using Speakable markup is no guarantee that the Google Assistant will read out your answers in response to questions, but it does seem worth trying.
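For site owners who want to try it, Google's Speakable documentation describes JSON-LD in which a `SpeakableSpecification` points to the sections of a page best suited to text-to-speech, using CSS selectors (or XPath). The Python helper below is a minimal sketch that generates such markup; the page name, URL, and CSS selectors are hypothetical placeholders, and since Speakable is still a beta feature, check Google's current documentation before relying on this format.

```python
import json
from typing import List


def speakable_jsonld(name: str, url: str, css_selectors: List[str]) -> str:
    """Build WebPage JSON-LD whose speakable sections are picked by CSS selectors."""
    data = {
        "@context": "https://schema.org/",
        "@type": "WebPage",
        "name": name,
        "speakable": {
            "@type": "SpeakableSpecification",
            "cssSelector": css_selectors,
        },
        "url": url,
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    # Hypothetical page and selectors, for illustration only.
    markup = speakable_jsonld(
        "How long should you boil an egg?",
        "https://www.example.com/boiling-eggs",
        [".answer-summary", ".answer-details"],
    )
    print('<script type="application/ld+json">\n' + markup + "\n</script>")
```

The selectors should point at short, self-contained passages, since those are the parts an assistant might read aloud.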
