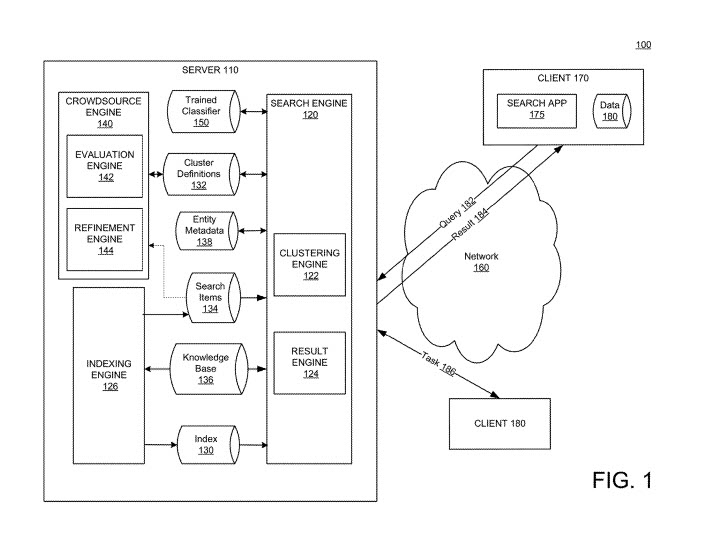

A Google patent on ranking software applications was just granted. It uses clustering and a crowdsourcing evaluation approach to choose the best-clustered search results. The crowdsourcing evaluation would be supplied by sources such as Mechanical Turk.

In addition to being used for software applications, this approach is also intended to apply to:

- Products sold in a marketplace

- Documents available over a network

- Songs in an online music store

- Images in a gallery

- Etc.

The Problem with Search Results Clustering

Clustered search results are meant to address queries that may return a large number of responsive items. We are told that automated, algorithm-generated clustering doesn’t always produce high-quality clusters. Manual evaluation and refinement of clustering results by experts can increase the quality of search results, but it is slow and cannot scale to large numbers of queries. That is the problem that this patent is intended to address.

Clustered Search Results as a Solution

The process behind this patent involves creating an improved system for crowdsourcing the evaluation and refinement of clustered search results in a scalable manner.

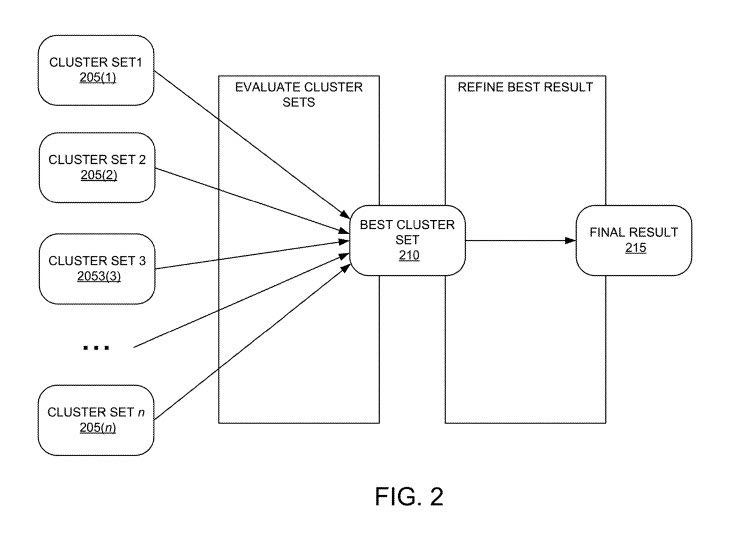

It begins with the system generating cluster sets for a query with the use of a variety of clustering algorithms.

Those clustered sets would then be presented to a set of crowdsourcing workers, in random order.

The workers would interact with a user interface that presents the cluster sets and collects their evaluations of each cluster set independently of the other sets. Each evaluation focuses on the quality of each cluster rather than comparing clusters to each other.

A score is generated for each cluster based on the evaluation for that cluster, including:

- Ratings given

- The time spent providing the ratings

- Additional information accessed

- Etc.

The scores across several worker responses may be used to determine which clustering algorithm produced the best set of clusters for the query.
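As a rough illustration of how such scores might be combined, here is a minimal sketch. The patent summary does not give a formula, so everything here is an assumption: the 1 to 5 rating scale, the `MIN_SECONDS` threshold, the halving weights, and all of the names are hypothetical. It only shows the general idea of a weighted average in which ratings from hurried or unfamiliar workers count for less.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Evaluation:
    algorithm: str        # clustering algorithm that produced the cluster set
    rating: float         # worker's rating (assumed 1-5 scale, not from the patent)
    seconds_spent: float  # time the worker spent providing the rating
    familiar: bool        # whether the worker reported familiarity with the query


MIN_SECONDS = 30.0  # hypothetical "sufficient time" threshold


def weight(ev: Evaluation) -> float:
    """Down-weight ratings from hurried or unfamiliar workers (assumed factors)."""
    w = 1.0
    if ev.seconds_spent < MIN_SECONDS:
        w *= 0.5
    if not ev.familiar:
        w *= 0.5
    return w


def cluster_set_scores(evals: list[Evaluation]) -> dict[str, float]:
    """Weighted average rating per clustering algorithm."""
    totals: dict[str, float] = defaultdict(float)
    weights: dict[str, float] = defaultdict(float)
    for ev in evals:
        w = weight(ev)
        totals[ev.algorithm] += w * ev.rating
        weights[ev.algorithm] += w
    return {algo: totals[algo] / weights[algo] for algo in totals}


def best_algorithm(evals: list[Evaluation]) -> str:
    """Algorithm whose cluster set received the highest weighted score."""
    scores = cluster_set_scores(evals)
    return max(scores, key=scores.get)
```

With this weighting, a single low rating from a worker who spent only a few seconds on the task pulls the average down far less than it would in a plain mean.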

These are not the raters who evaluate search results using Google’s Quality Rater’s Guidelines. I have never seen those human evaluators go through a task such as rating search results clusters.

Refinement of Search Results Clustering

In addition to rating search result clusters, the patent also tells us that these crowdsourced workers could suggest changes and refinements to the cluster set that has been determined to be the best. During the crowdsourced evaluation, the workers may suggest changes according to a series of refinement tasks.

Refinement tasks can include:

- Merging two clusters that are too similar

- Deleting a cluster that doesn’t seem to fit with the others

- Deleting an entity/topic from a cluster

- Deleting a particular search item from a cluster

- Moving an entity or search item from one cluster to another cluster

We are also told that:

If the suggested refinement meets an agreement threshold for the tasks, the system may automatically make the refinement by changing the cluster definition and/or may report the refinement to an expert.
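The agreement threshold described in that passage could be sketched as follows. This is only an illustration: the patent text quoted here does not say how agreement is measured, so the fraction-of-workers rule, the default `threshold` of 0.5, the tuple format for refinements, and the function name are all assumptions.

```python
from collections import Counter


def agreed_refinements(suggestions, num_workers, threshold=0.5):
    """Return the refinements suggested by at least `threshold` of the workers.

    suggestions: iterable of (worker_id, refinement) pairs, where a refinement
    is a hashable tuple of strings such as ("merge", "Puzzle", "Brain Games").
    """
    # Count each worker at most once per refinement.
    unique_votes = {(worker, refinement) for worker, refinement in suggestions}
    counts = Counter(refinement for _, refinement in unique_votes)
    return sorted(
        refinement
        for refinement, votes in counts.items()
        if votes / num_workers >= threshold
    )
```

A refinement that clears the threshold could then be applied to the cluster definition automatically or passed along to an expert, as the patent describes.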

Cluster Set Testing

Each of the cluster sets may represent a different clustering algorithm. The sets are presented to crowdsourced workers in random order, and the workers rate the clusters in each set. Those ratings are combined to generate a score for each cluster set.

The method also includes storing a cluster set definition for the cluster set with the highest cluster set score, with that definition being associated with the query. After receiving a request for the query, the system uses the stored cluster set definition to initiate the display of search items responsive to the query.
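The testing flow above might look something like the sketch below. It is not taken from the patent: the function names, the per-worker seeding, and the use of a plain dictionary as the store are assumptions made to keep the example small.

```python
import random


def presentation_order(cluster_set_ids, worker_id, seed=0):
    """Shuffle the cluster sets for one worker so no set is always shown first.

    Seeding by worker id (an assumption) makes each worker's order repeatable.
    """
    rng = random.Random(f"{seed}:{worker_id}")
    order = list(cluster_set_ids)
    rng.shuffle(order)
    return order


def store_best(definitions_by_query, query, scores, cluster_sets):
    """Store the definition of the highest-scoring cluster set for the query."""
    best = max(scores, key=scores.get)
    definitions_by_query[query] = cluster_sets[best]
    return best


def definition_for(definitions_by_query, query):
    """Look up the stored cluster set definition when the query is requested."""
    return definitions_by_query.get(query)
```

Once a definition is stored, later requests for the same query can be answered from the stored cluster set without repeating the evaluation.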

Advantages of This Search Results Clustering Approach

These implementations may be used to realize one or more of the following advantages.

- The system provides a way to determine which clustering algorithm produces the best-clustered query results for individual queries. This provides a better user experience for users who view the results

- The evaluation and rating are scalable (e.g., can handle hundreds or thousands of queries) because it relies on crowdsource tasks and not experts

- The system maximizes quality by down-weighting ratings from crowdsourcing workers who do not spend sufficient time on the task and/or who do not have sufficient expertise (e.g., familiarity with the query and the search items)

- The system also maximizes quality by presenting the different cluster sets randomly to different workers to avoid a bias of the worker to spend more time on the first set presented

- By asking the worker to evaluate each cluster before evaluating the overall cluster set, the system encourages the evaluation of each cluster

- The system provides a maximum number of high quality or important search items for each cluster to assist the crowdsource worker in evaluating redundancy between clusters in a cluster set

- The system facilitates consensus on the refinement of cluster sets, such as merging two clusters in the cluster set, deleting a cluster from the cluster set, or deleting specific topics or search items from a cluster, and may automatically make changes to the cluster set definition when a minimum number of workers recommend the same refinement

The Search Result Clustering Patent can be found at:

Crowdsourced evaluation and refinement of search clusters

Inventors: Jilin Chen, Amy Xian Zhang, Sagar Jain, Lichan Hong, and Ed Huai-Hsin Chi

Assignee: GOOGLE LLC

US Patent: 10,331,681

Granted: June 25, 2019

Filed: April 11, 2016

Abstract

Implementations provide an improved system for presenting search results based on entity associations of the search items. An example method includes, for each of a plurality of crowdsource workers, initiating display of a first randomly selected cluster set from a plurality of cluster sets to the crowdsource worker. Each cluster set represents a different clustering algorithm applied to a set of search items responsive to a query. The method also includes receiving cluster ratings for the first cluster set from the crowdsource worker and calculating a cluster set score for the first cluster set based on the cluster ratings. This is repeated for the remaining cluster sets in the plurality of cluster sets. The method also includes storing a cluster set definition for a highest scoring cluster set, associating the cluster set definition with the query, and using the definition to display search items responsive to the query.

Crowdsourcing Evaluation Takeaways

I found this patent interesting because of the use of human evaluators who weren’t ranking search results, but instead were rating and refining the best clusters of search results. The clusters are based on different clustering algorithms, and the patent doesn’t tell us much about how those clustering algorithms might work. The place where you may have seen clustering in search results at Google has been Google News, where news articles are grouped by topics and geography, and the most representative results are usually the highest-ranking within each of those clusters.

Instead of subject matter experts rating clusters, a crowdsourcing evaluation approach is used. It likely saves time, and the evaluators can rate and refine a lot of clusters of search results. This has me wondering how web pages might be able to stand out as being representative of clusters.
