
If you’re working on an eCommerce site, the robots.txt file is one of the most important foundational elements of your site’s SEO. eCommerce sites are generally much bigger than most sites on the Web, and they contain features such as faceted navigation that can multiply the number of crawlable URLs. This means these sites need tighter control over how Google crawls them, both to manage crawl budget and to keep Googlebot away from low quality pages.


However, when it comes to Shopify, the robots.txt has long been a gripe of the SEO community. For many years, one of the biggest frustrations for Shopify SEO has been a lack of control over the robots.txt. This made the platform more difficult to work with compared to others such as Magento, where users have always been able to easily edit the robots.txt. While the default robots.txt does a great job of keeping crawlers out of low-value pages, some sites require adjustments to this file. As more sites start to use the platform, we’re now seeing Shopify sites get larger and more robust, requiring more crawl intervention through the robots.txt.

Fortunately, Shopify has been doing a great job of improving the experience of its platform. In June 2021, Shopify announced that you can now customize the robots.txt file for your site:

User-agent: everyone

Allow: /

Starting today, you have complete control over how search engine bots see your store. #shopifyseo https://t.co/Hz9Ijj5h1y

Tobi Lutke (@tobi), June 18, 2021

This is huge news for SEOs and Shopify store owners who have been asking for years to adjust the file. It also shows that Shopify listens to the feedback that SEOs give and is taking steps to improve the platform from a search standpoint.

So now that we know you can edit the file, let’s talk about how to make those adjustments and situations where you might consider doing so.

What Is The Shopify Robots.txt?

The Shopify robots.txt is a file that instructs search engines as to what URLs they can crawl on your site. Most commonly, the robots.txt file can block search engines from finding low quality pages that shouldn’t be crawled. The Shopify robots.txt is generated by using a file called robots.txt.liquid.

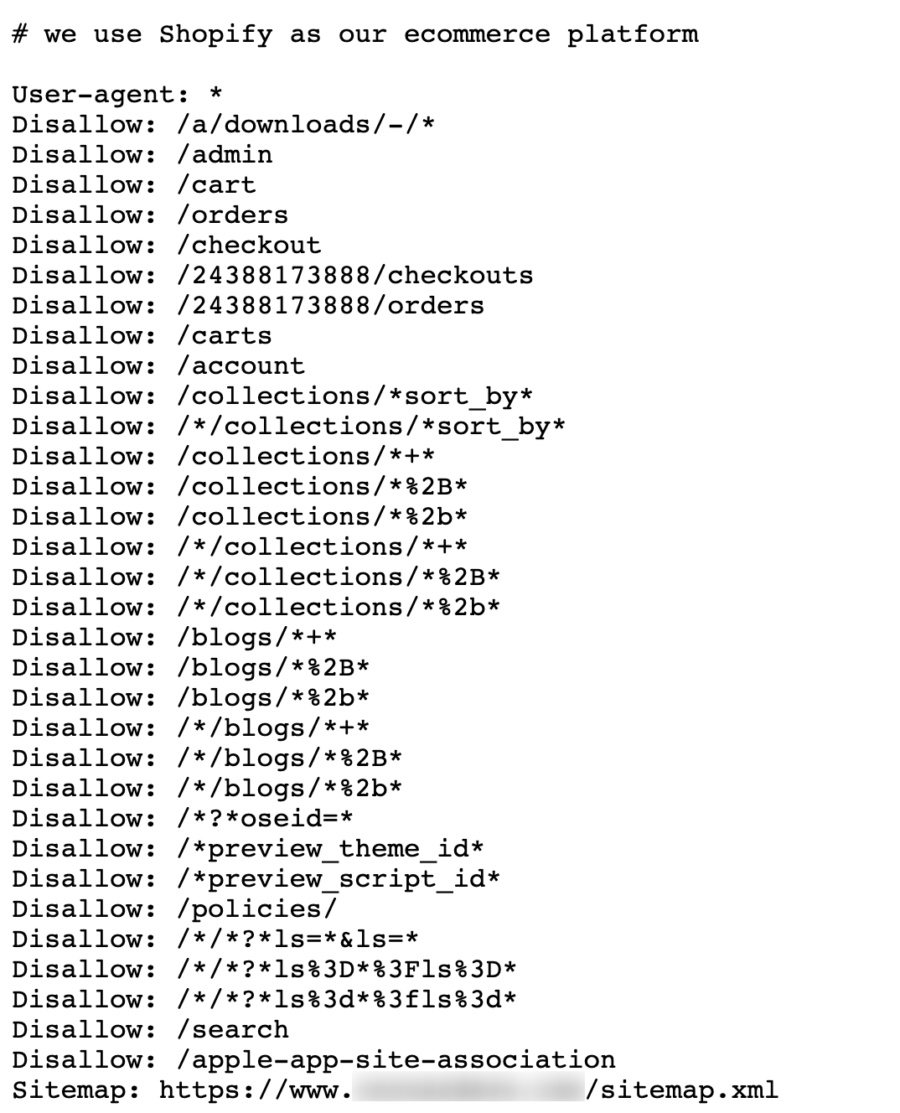

What Does Shopify’s Default Robots.txt Block?

When looking at your out-of-the-box Shopify site, you might notice that a robots.txt file is already configured. You can find this file by navigating to:

domain.com/robots.txt

In this robots.txt file, you’ll see that there are already a good number of preconfigured rules.

The vast majority of these rules are useful to keep search engines from crawling unnecessary pages. Below are some of the most important rules in the default Shopify robots.txt file:

- Disallow: /search – Blocks internal site search

- Disallow: /cart – Blocks the Shopping Cart page

- Disallow: /checkout – Blocks the Checkout page

- Disallow: /account – Blocks the account page

- Disallow: /collections/*+* – Blocks duplicate category pages generated by the faceted navigation

- Sitemap: [Sitemap Links] – References the sitemap.xml link

Overall, Shopify’s default rules do a pretty good job of blocking the crawl of lower quality Web pages for most sites. In fact, it’s likely that the majority of Shopify store owners don’t need to make any adjustments to their robots.txt file. The default configuration should be enough to handle most cases. Most Shopify sites generally tend to be smaller in size and crawl control isn’t a huge issue for many of them.

Of course, as more and more sites adopt the Shopify platform, the websites on it are getting larger and larger. We’re also seeing more sites with custom configurations where the default robots.txt rules aren’t enough.

While Shopify’s existing rules do a good job of accounting for most cases, sometimes store owners might need to create additional rules in order to tailor the robots.txt to their site. This can be done by creating and editing a robots.txt.liquid file.

How Do You Create The Shopify Robots.txt.liquid?

You can create the Shopify robots.txt.liquid file by performing the following steps in your store:

- In the left sidebar of your Shopify admin page, navigate to Online Store > Themes

- Select Actions > Edit code

- Under “Templates”, click the “Add a new template” link

- Click the left-most dropdown and choose “robots.txt”

- Select “Create template”

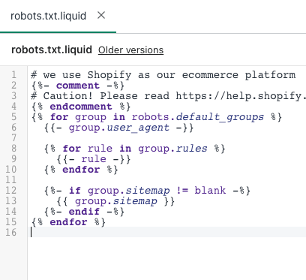

You should then see the Shopify robots.txt.liquid file open in the editor:
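As a rough sketch, the generated template usually looks something like the block below. This is an assumption based on Shopify's documented default at the time of writing, so the copy in your own theme may differ slightly. It loops over Shopify's default rule groups and prints each group's user agent, rules, and sitemap reference.

```liquid
{%- comment -%} Sketch of the default template; your theme's copy may differ. {%- endcomment -%}
{% for group in robots.default_groups %}
  {{- group.user_agent }}

  {%- for rule in group.rules -%}
    {{ rule }}
  {%- endfor -%}

  {%- if group.sitemap != blank -%}
    {{ group.sitemap }}
  {%- endif -%}
{% endfor %}
```

Leaving this loop in place keeps all of Shopify's default rules, so any customization is normally added to it rather than replacing it.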

How Do You Edit The Shopify Robots.txt File?

Adding A Rule

If you want to add a rule to the Shopify robots.txt, you can do so by adding additional blocks of code to the robots.txt.liquid file.

{%- if group.user_agent.value == '*' -%}

{{ 'Disallow: [URLPath]' }}

{%- endif -%}

For instance, if your Shopify site uses /search-results/ for the internal search function and you want to block it with the robots.txt, you could add the following command. Robots.txt rules match by URL prefix rather than by regular expression, so the path alone covers every URL beneath it:

{%- if group.user_agent.value == '*' -%}

{{ 'Disallow: /search-results/' }}

{%- endif -%}

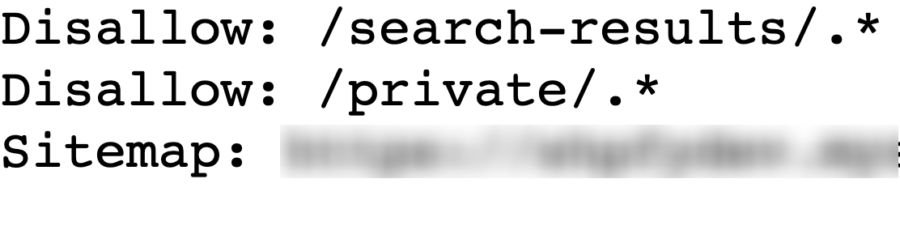

If you wanted to block multiple directories (/search-results/ and /private/), you would add the following two blocks to the file:

{%- if group.user_agent.value == '*' -%}

{{ 'Disallow: /search-results/' }}

{%- endif -%}

{%- if group.user_agent.value == '*' -%}

{{ 'Disallow: /private/' }}

{%- endif -%}

These lines should then populate in your Shopify robots.txt file.
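The custom blocks depend on the `group` variable, so they need to sit inside the loop over the default groups. The sketch below shows one plausible placement, after the default rules and before the sitemap line. It assumes your template follows Shopify's documented default layout, and the /search-results/ and /private/ paths are only the illustrative directories from the example above.

```liquid
{% for group in robots.default_groups %}
  {{- group.user_agent }}

  {%- for rule in group.rules -%}
    {{ rule }}
  {%- endfor -%}

  {%- comment -%} Custom rules: only added to the catch-all (*) group {%- endcomment -%}
  {%- if group.user_agent.value == '*' -%}
    {{ 'Disallow: /search-results/' }}
  {%- endif -%}

  {%- if group.user_agent.value == '*' -%}
    {{ 'Disallow: /private/' }}
  {%- endif -%}

  {%- if group.sitemap != blank -%}
    {{ group.sitemap }}
  {%- endif -%}
{% endfor %}
```

After saving, it's worth loading domain.com/robots.txt in a browser to confirm that the new Disallow lines appear under the `User-agent: *` group.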

Potential Use Cases For A Shopify Robots.txt File

So knowing that the standard robots.txt is generally sufficient for most sites, in what situations would your site benefit from editing Shopify’s robots.txt.liquid file? Below are some of the more common situations when you might want to consider adjusting yours:

Internal Site Search

A general best practice for SEO is to block a site’s internal search via the robots.txt. This is because there are an infinite number of queries that users could enter into a search bar. If Google is able to start crawling these pages, it could lead to a lot of low quality search result pages appearing in the index.

Fortunately, Shopify’s default robots.txt blocks the standard internal search with the following command:

Disallow: /search

However, many Shopify sites don’t use Shopify’s default internal search. We find that many Shopify sites end up using apps or other internal search technologies. This frequently changes the URL of the internal search. When this happens, your site is no longer protected by Shopify’s default rules.

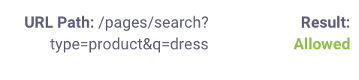

For instance, on this site the internal search results render at URLs with /pages/search in the path:

This means that these internal search URLs are allowed to be crawled by Google:

This website might want to consider editing Shopify’s robots.txt rules to add custom commands that block Google from crawling the /pages/search directory.
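A minimal sketch of such a rule is shown below, assuming the search app serves all of its results under /pages/search and that the block is placed inside the template's loop over the default groups.

```liquid
{%- comment -%} Assumes internal search results live under /pages/search {%- endcomment -%}
{%- if group.user_agent.value == '*' -%}
  {{ 'Disallow: /pages/search' }}
{%- endif -%}
```

Because rules match by prefix, this would also block any other page whose path starts with /pages/search, so it's worth checking that no page you want crawled shares that prefix.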

Faceted Navigations

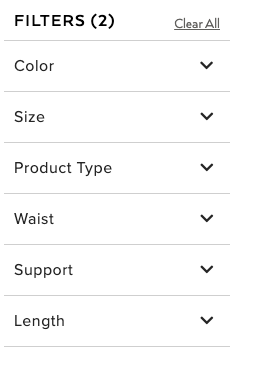

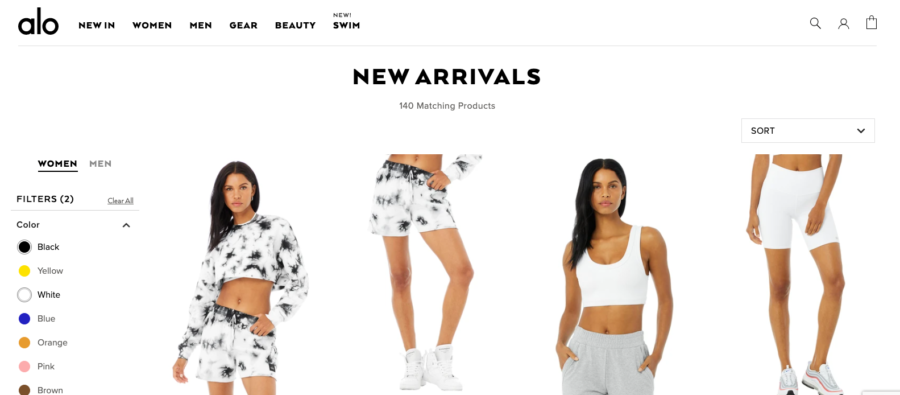

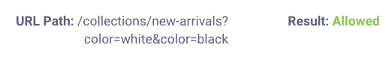

If your site has a faceted navigation, you might want to consider adjusting your Shopify robots.txt file. Faceted navigation refers to the filtering options you can apply on category pages. They’re generally found on the left-hand side of the page. For example, this Shopify site lets users filter products by color, size, product type, and more:

When we select the “Black” and “Yellow” color filters, we can see that a URL with the “?color” parameter is loaded:


While Shopify’s default robots.txt does a good job of blocking page paths that a faceted navigation might create, it can’t account for every single use case. In this instance, the “color” parameter is not blocked, which allows Google to crawl the page.

This is another instance where we might want to consider blocking pages with the robots.txt in Shopify. Since a large number of these faceted navigation URLs could be crawled, we might want to block many of them to reduce the crawl of lower quality or near-duplicate pages. This site could determine all of the parameters in the faceted navigation that it would like to block (size, color) and then create rules in the robots.txt to block their crawl.
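As a hedged sketch, rules for the “color” parameter could look like the following. The `*` wildcard is supported by Google and Bing, and the two patterns cover the parameter appearing first (`?color=`) or later (`&color=`) in the query string. The parameter name is taken from the example above; your own theme or filter app may use different names.

```liquid
{%- comment -%} Assumes the filter parameter is named "color"; repeat for size, etc. {%- endcomment -%}
{%- if group.user_agent.value == '*' -%}
  {{ 'Disallow: /*?color=' }}
{%- endif -%}

{%- if group.user_agent.value == '*' -%}
  {{ 'Disallow: /*&color=' }}
{%- endif -%}
```

Matching on `?color=` and `&color=` separately avoids accidentally blocking unrelated parameters whose names merely end in “color”.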

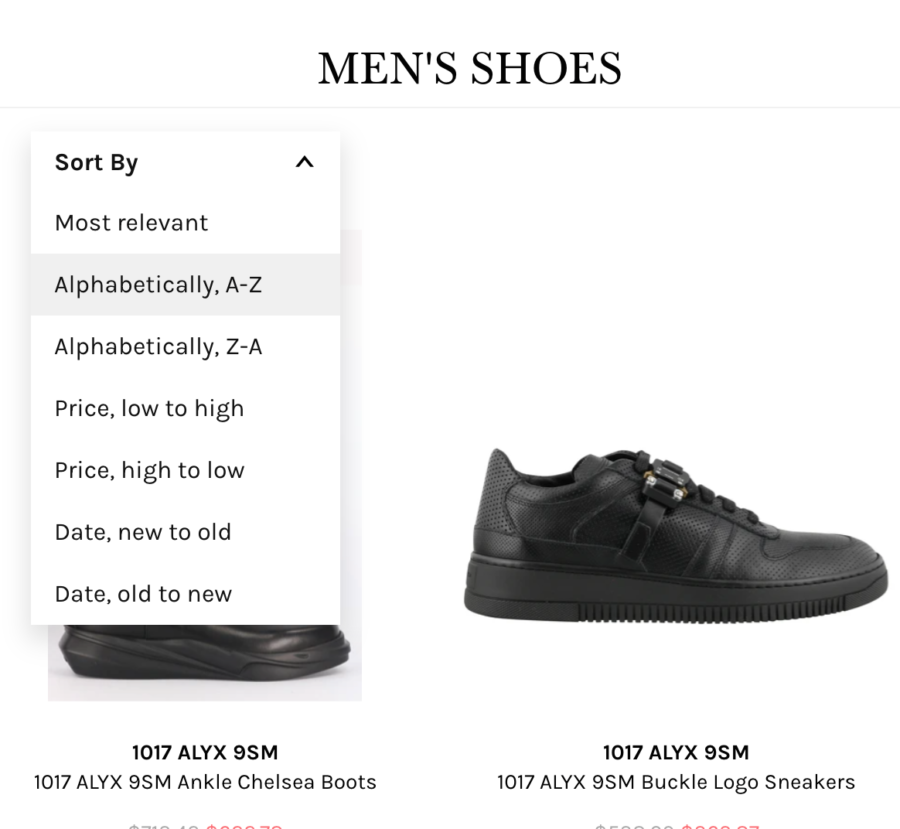

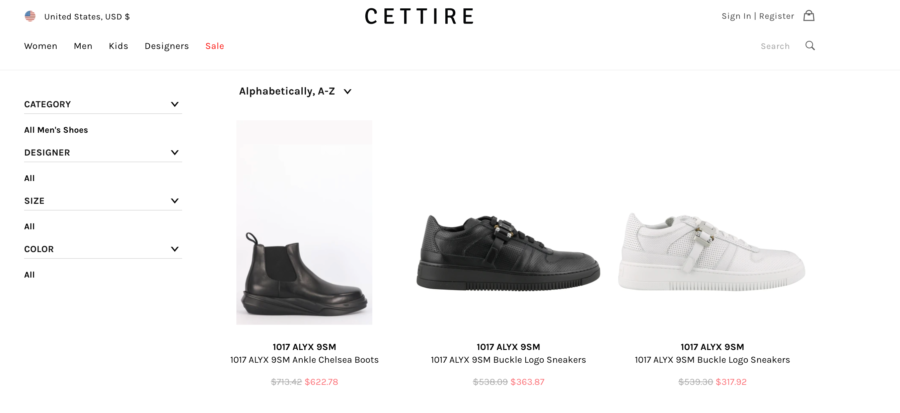

Sorting Navigation

Similar to faceted navigation functionalities, many eCommerce sites include sorting on their category pages. These pages let users see the products offered on category pages in a different order (Price: low to high, Most relevant, Alphabetically, etc).

The issue this creates is that these pages contain duplicate/similar content as they are simply variations of the original category page but with the products in a different order. Below you can see how when selecting “Alphabetically, A-Z”, a parameterized URL is created that sorts products alphabetically. This URL uses the “?q” parameter appended to the end of it:


Of course this isn’t a unique URL that should be crawled and indexed as it’s simply the same products as the original category page sorted in a different order. This Shopify site might want to consider adding a robots.txt rule that blocks the crawl of all “?q” URLs.
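A rule along these lines might look like the sketch below, assuming the sort option really is carried by a “q” parameter as in the example above. Note that it would apply to every URL on the site with a `q` parameter, not only sorted category pages.

```liquid
{%- comment -%} Assumes sorted views use the "q" parameter, as in the example {%- endcomment -%}
{%- if group.user_agent.value == '*' -%}
  {{ 'Disallow: /*?q=' }}
{%- endif -%}

{%- if group.user_agent.value == '*' -%}
  {{ 'Disallow: /*&q=' }}
{%- endif -%}
```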


Conclusion

Shopify’s robots.txt.liquid file gives SEOs much greater control over the crawl of their site than they once had. While Shopify’s default robots.txt should be sufficient to keep search engines out of undesirable areas for most sites, you might want to consider adjusting it if you notice that an edge case applies to you. Generally, the larger your store is and the more customization you’ve done to it, the more likely it is you’ll want to make adjustments to the robots.txt file. If you have any questions about the robots.txt or Shopify SEO agency services, feel free to reach out!

Other Shopify SEO Resources

- Shopify Speed Optimization

- Improving Shopify Duplicate Content

- Shopify Sitemap.xml Guide

- Shopify Structured Data

- Shopify SEO Tools

- Shopify Plus SEO

- All Shopify SEO Articles
