
What are Good Answers for Queries that Ask Questions?

Published: March 23, 2020



Elements of Answer-Seeking Queries and Elements of Answers

Google was recently granted a patent focusing on answer-seeking queries and providing good answers to such queries.

Having an idea of what the elements of answer-seeking queries are can help people understand what they might need to publish to provide answers to those queries.


So it is worth looking at what this patent says Google may be looking for in good answers to queries.

This patent focuses on:

How a search system can learn the characteristic elements of answer-seeking queries and answers to answer-seeking queries.

The patent’s description starts by telling us more about good answers to queries:

In general, a search system receives a search query and obtains search results that satisfy the search query. The search results identify resources that are relevant or responsive to the search query, e.g., Internet-accessible resources. A search system can identify many different types of search results in response to a received search query, e.g., search results that identify web pages, images, videos, books, or news articles, search results that present driving directions, in addition to many other types of search results.

This can include Google being aware of information about entities in those queries and using that information in an answer:

Search systems may make use of various subsystems to obtain resources relevant to a query. For example, a search system can maintain a knowledge base that stores information about various entities and provide information about the entities when a search query references the alias of an entity. The system can assign one or more text string aliases to each entity. For example, the Statue of Liberty can be associated with aliases “the Statue of Liberty” and “Lady Liberty.” Aliases need not be unique among entities. For example, “jaguar” can be an alias both for an animal and for a car manufacturer.
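The alias idea can be pictured as a reverse index from alias strings to entities. This is a minimal sketch, not Google's implementation: the entity IDs and alias lists are hypothetical, and the point it illustrates is that one alias ("jaguar") can map to more than one entity.

```python
from collections import defaultdict

# Hypothetical toy knowledge base: entity IDs mapped to their text-string aliases.
# These IDs are illustrative only, not real knowledge base identifiers.
ENTITY_ALIASES = {
    "entity/Statue_of_Liberty": ["the Statue of Liberty", "Lady Liberty"],
    "entity/Jaguar_animal": ["jaguar", "Panthera onca"],
    "entity/Jaguar_carmaker": ["jaguar", "Jaguar Cars"],
}


def build_alias_index(entity_aliases):
    """Reverse the table so each lowercased alias maps to every entity using it."""
    index = defaultdict(set)
    for entity_id, aliases in entity_aliases.items():
        for alias in aliases:
            index[alias.lower()].add(entity_id)
    return index


def entities_for_alias(index, text):
    """Return all entities a query string may refer to; aliases need not be unique."""
    return sorted(index.get(text.lower(), set()))


index = build_alias_index(ENTITY_ALIASES)
print(entities_for_alias(index, "Lady Liberty"))  # ['entity/Statue_of_Liberty']
print(entities_for_alias(index, "Jaguar"))  # ['entity/Jaguar_animal', 'entity/Jaguar_carmaker']
```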

It can also involve Google understanding different parts of speech in the queries, and using that information in answers too:

Another example search subsystem is a part-of-speech tagger. The part-of-speech tagger analyzes terms in a query and classifies each term as a particular part of speech, e.g., a noun, verb, or direct object. Another example search subsystem is a root word identifier. Given a particular query, the root word identifier can classify a term in the query as a root word, which is a word that does not depend on any other words in the query. For example, in the query “how to cook lasagna,” a root word identifier can determine that “cook” is the root word of the query.
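“A word that does not depend on any other words” is the root of a dependency parse. The sketch below assumes a parse is already available as a list of head indices; the parse shown is hand-written and hypothetical, since a real system would get it from a parser.

```python
def root_word(tokens, heads):
    """Return the one token whose head is None, i.e. it depends on no other token."""
    roots = [token for token, head in zip(tokens, heads) if head is None]
    if len(roots) != 1:
        raise ValueError("a dependency parse should have exactly one root")
    return roots[0]


# Hand-written, hypothetical parse of "how to cook lasagna": "how", "to" and
# "lasagna" all attach to "cook" (head index 2), which attaches to nothing.
tokens = ["how", "to", "cook", "lasagna"]
heads = [2, 2, None, 2]
print(root_word(tokens, heads))  # cook
```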

Above all, this patent is about finding concise answers to the questions searchers ask:

This specification describes technologies relating to classifying queries as answer-seeking and generating answers to answer-seeking queries. An answer-seeking query is a query issued by a user who seeks a concise answer. For example, “when was George Washington born” would be classified by a system as an answer-seeking query because the system can determine that it is likely that a user who issues it seeks a concise answer, e.g., “Feb. 22, 1732.”

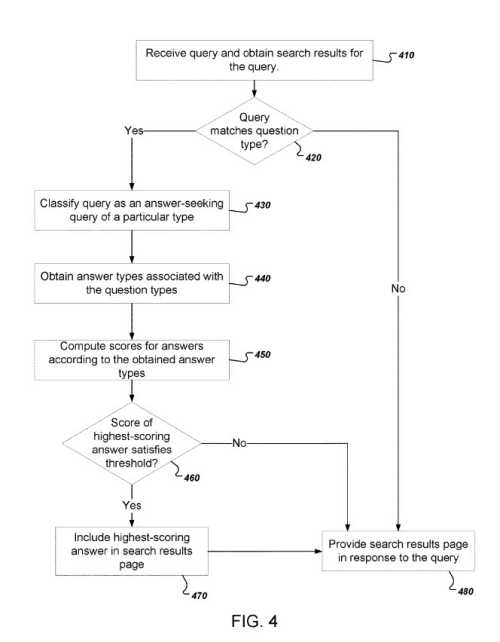

An example answer-seeking query, from the patent’s drawings:

Classifying queries as answer-seeking and generating answers to answer-seeking queries

An answer-seeking query is one sought by a searcher who is looking for a concise answer.

One example is, “When was George Washington born?” That query could be classified as answer-seeking because a searcher who asks it most likely wants an answer such as “Feb. 22, 1732.”

The patent tells us that not all queries are answer-seeking, and that queries which aren’t may return only search results, without an answer box.

For some queries, the best response may be a sorted list of multiple documents relevant to the query. Someone who searches for “restaurants in new york” likely wants a list of documents about different places to eat in NYC.

The patent then explains the purpose behind it:

The techniques described below relate both to how a system can classify a query as an answer-seeking query and how a system can identify portions of responsive documents that are likely to be good answers to an answer-seeking query.

This is the first time I have seen anything from Google that tells us what a “good answer” to a question might be in an answer box.

Identifying Answer Seeking Queries

The patent description tells us what the process behind recognizing answer-seeking queries might be.

It begins with a summary of how the process works, which I go into in more detail later in this post.

The answer-seeking query identification process includes:

- Receiving a query that has multiple terms

- Classifying a query as an answer-seeking query of a particular question type

- Obtaining one or more answer types associated with the particular question type

- Where each answer type specifies one or more respective answer elements representing characteristics of a proper answer to the answer-seeking query

- Obtaining search results satisfying the query, where each identifies a document

- Computing a respective score for each of one or more passages of text in each document identified by the search results

- Where the score for each passage of text is based on how many of the one or more answer types match the passage of text

- Providing, in response to the query, a presentation that includes information from one or more of the passages of text selected based on the respective score
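The core scoring step in that list (a passage’s score is how many answer types match it) can be sketched in a few lines. This is a minimal illustration under loud assumptions that are not from the patent: an answer type is a tuple of plain lowercase terms, a passage matches it when every term appears in the passage, and the answer types and documents are made up.

```python
import re

# Assumption for this sketch: answer types are tuples of plain lowercase
# terms, and a passage matches one when it contains every term.


def passage_terms(passage):
    """Lowercase word and number tokens of a passage, as a set."""
    return set(re.findall(r"[a-z0-9]+", passage.lower()))


def score_passage(passage, answer_types):
    """Score = how many of the answer types match this passage."""
    terms = passage_terms(passage)
    return sum(1 for answer_type in answer_types if set(answer_type) <= terms)


def rank_passages(documents, answer_types):
    """Score every passage of every result document, highest score first."""
    scored = [
        (score_passage(passage, answer_types), passage)
        for passages in documents
        for passage in passages
    ]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


# Hypothetical answer types for a "when ... born" question type.
answer_types = [("born",), ("was", "born"), ("born", "on")]
documents = [
    ["George Washington was the first US president.",
     "He was born on Feb. 22, 1732, in Virginia."],
    ["Washington crossed the Delaware in 1776."],
]
for score, passage in rank_passages(documents, answer_types):
    print(score, passage)
```

The top-ranked passage is the candidate for the presentation; richer answer elements (entities, measurements, verb classes) would need their own matchers.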

Some other optional features involved in this process:

- Providing a first passage of text and one or more search results satisfying the query

- Determining which passages of text have scores that satisfy a threshold

- Selecting the passages of text having scores that satisfy the threshold for inclusion in the presentation

What are Answer-Seeking Queries?

Classifying queries as “answer-seeking queries” of particular types can mean:

- Matching terms of queries against a number of question types

- Where each question type specifies a number of question elements that collectively represent characteristics of a corresponding type of query

- Determining that terms of queries match a first question type of the number of question types

How do Terms of Queries Match Question types?

An “n-gram” is a sequence of n words, so a 2-gram is two words long and a 3-gram is three words long. By phrasing this in terms of n-grams, the process in the patent has the flexibility to work with phrases of different lengths.
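To make the term concrete, here is a short sketch that lists the n-grams of a query. Splitting on whitespace is a simplifying assumption; real tokenization is more involved.

```python
def ngrams(terms, n):
    """Return every contiguous sequence of n terms, as tuples."""
    return [tuple(terms[i:i + n]) for i in range(len(terms) - n + 1)]


def all_ngrams(terms, max_n):
    """Collect 1-grams up to max_n-grams so different lengths can be explored."""
    return [gram for n in range(1, max_n + 1) for gram in ngrams(terms, n)]


terms = "when was george washington born".split()
print(ngrams(terms, 2))
# [('when', 'was'), ('was', 'george'), ('george', 'washington'), ('washington', 'born')]
```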

Determining that the terms of a query match a particular question type can mean:

- Determining that a first n-gram in the query represents an entity instance

- Determining that the first question type includes a question element representing that entity instance

It can also mean:

- Deciding that the first n-gram in the query represents an instance of a class

- Determining that the question type includes a question element representing that class
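Both kinds of match can be sketched by expanding a query into its question elements and then checking that a question type’s elements are all present. The lookup tables here are hypothetical stand-ins for a knowledge base, and the element names simply follow the notation used in this post.

```python
# Hypothetical lookup tables standing in for a knowledge base.
ALIAS_TO_ENTITY = {
    ("george", "washington"): "entity/George_Washington",
    ("lasagna",): "entity/Lasagna",
}
ENTITY_TO_CLASS = {
    "entity/George_Washington": "entity/us_presidents",
    "entity/Lasagna": "entity/dishes",
}


def question_elements(query, max_n=3):
    """Plain terms plus entity-instance and entity-class elements for a query."""
    terms = query.lower().split()
    elements = set(terms)  # single-word n-gram elements
    for n in range(1, max_n + 1):
        for i in range(len(terms) - n + 1):
            entity = ALIAS_TO_ENTITY.get(tuple(terms[i:i + n]))
            if entity is not None:
                elements.add(entity)  # entity instance element
                if entity in ENTITY_TO_CLASS:
                    elements.add(ENTITY_TO_CLASS[entity])  # entity class element
    return elements


def matches_question_type(question_type, query):
    """Unordered match: the query must supply every element of the question type."""
    return set(question_type) <= question_elements(query)


print(matches_question_type(("when", "entity/George_Washington"), "when was george washington born"))  # True
print(matches_question_type(("how", "cook", "entity/dishes"), "how to cook lasagna"))  # True
print(matches_question_type(("how", "entity/dishes"), "when was george washington born"))  # False
```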

Determining that a first passage of text matches a first answer type of the one or more answer types can mean:

- Deciding that the first passage of text has n-grams that match one or more answer elements of the first answer type

- A first answer element of the one or more answer elements may represent a numerical measurement

- In that case, determining that the first passage of text matches the first answer type means determining that it has an n-gram that represents a numerical measurement

- Alternatively, the first answer element may represent a verb class

- In that case, determining that the first passage of text matches the first answer type means determining that it has an n-gram that represents an instance of the verb class
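A rough sketch of those two answer element checks follows. The measurement regex covers only a few formats and is an illustration, not the patent’s method; the verb class uses a subset of the verb/blend list the patent gives later, and it does not check part of speech, so “mix” used as a noun would also match.

```python
import re

# Illustrative measurement patterns only; real coverage would be far broader.
MEASUREMENT = re.compile(
    r"\b\d{1,2}/\d{1,2}/\d{4}\b"  # dates such as 2/19/1997
    r"|\b\d+(?:\.\d+)?\s*(?:cm|inches|minutes|hours?)\b"  # 1.85 cm, 10 minutes, 1 hour
    r"|\b\d{4}\b"  # bare years such as 1732
)
# Subset of the patent's verb/blend example; no part-of-speech check is done.
VERB_CLASSES = {"verb/blend": {"add", "blend", "combine", "fuse", "join", "merge", "mix"}}


def element_matches(element, passage):
    """Check one answer element against a passage."""
    text = passage.lower()
    if element == "measurement":
        return MEASUREMENT.search(text) is not None
    if element in VERB_CLASSES:
        return bool(set(re.findall(r"[a-z]+", text)) & VERB_CLASSES[element])
    # Anything else is treated as a literal n-gram.
    return re.search(r"\b" + re.escape(element) + r"\b", text) is not None


def answer_type_matches(answer_type, passage):
    """A passage matches an answer type when every answer element matches."""
    return all(element_matches(element, passage) for element in answer_type)


print(answer_type_matches(("measurement",), "Bake for 45 minutes at 375 degrees."))  # True
print(answer_type_matches(("verb/blend", "measurement"), "Mix the flour and sugar for 2 minutes."))  # True
print(answer_type_matches(("verb/blend",), "Preheat the oven."))  # False
```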

This patent can be found at:

Generating elements of answer-seeking queries and elements of answers

Inventors: Yi Liu, Preyas Popat, Nitin Gupta, and Afroz Mohiuddin

Assignee: Google LLC

US Patent: 10,592,540

Granted: March 17, 2020

Filed: June 28, 2016

Abstract

Methods, systems, and apparatus, including computer programs encoded on computer storage media, for generating answers to answer-seeking queries.

One of the methods includes receiving a query having multiple terms. The query is classified as an answer-seeking query of a particular question type, and one or more answer types associated with the particular question type are obtained.

Search results satisfying the query are obtained, and a respective score is computed for each of one or more passages of text occurring in each document identified by the search results, wherein the score for each passage of text is based on how many of the one or more answer types match the passage of text.

A presentation that includes information from one or more of the passages of text selected based on the respective score is provided in response to the query.

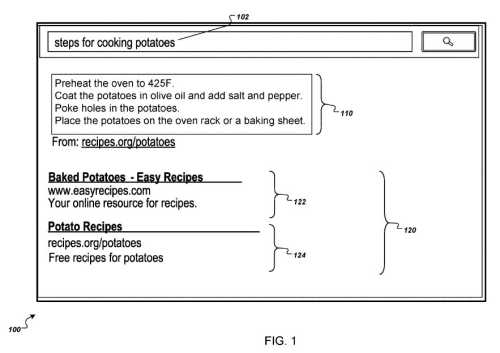

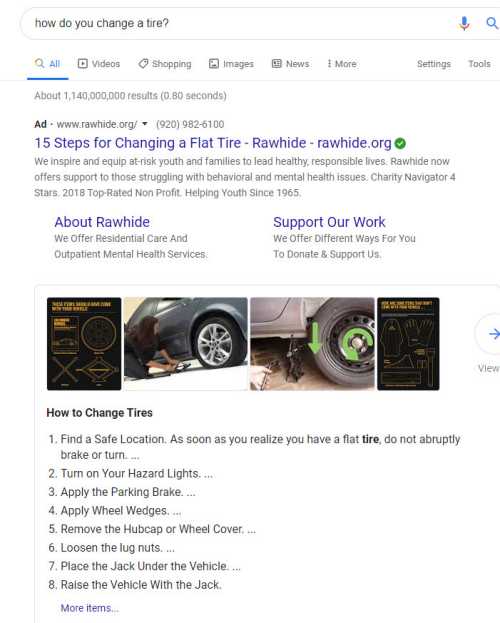

Presentation of an answer for an answer-seeking query

A search results page may include web search results as well as an answer box.

I wrote more about answer boxes in the post, How Google May Trigger Answer Box Results for Queries.

Web search results can provide links to documents from Google’s index of the web.

They are results that are considered to be likely relevant to a query asked and include a title, a snippet, and a display link.

Those elements give a searcher an idea of how relevant a particular result might be to their query, and the link lets them visit the page the result comes from.

An answer box can directly include an answer to a query. That answer is likely to be obtained from the text of a document referenced in the web search results.

I wrote about such answers in the post, Featured Snippets – Natural Language Search Results for Intent Queries. Those answers likely come from authoritative documents, most likely ones on the first page of a set of search results.

Google had been showing these answer box results above the organic results and then showing the same page a second time in the SERPs, but it recently decided to treat such answers as a single result, as described at Search Engine Journal in “Google: Webpages with Featured Snippets Won’t Appear Twice on Page 1.”

This patent tells us that Google may “provide the answer box whenever the system decides that the query is an answer-seeking query.”

There are a few different ways that Google may consider a query to be an answer-seeking query, based upon whether it uses terms that match a specific question type.

Queries like these may trigger an answer box by including question terms such as “how” or “why.”

This patent tells us that those question terms aren’t always necessary, and an answer box could be shown even when a query is not phrased as a question and does not include a question word.

But when a query is something such as “How to cook a potato?”, “How to make French Fries?”, or “How to make Mashed Potatoes?”, the searcher is likely looking for an answer box.

Even without question terms or a query phrased as a question, Google may look at a query and decide whether it is best answered by a particular answer type:

Rather, the answer in the answer box is identified as a good answer because the search system has determined that the question type matching the query is often associated with an answer type that matches the text of the document referenced by the search result.

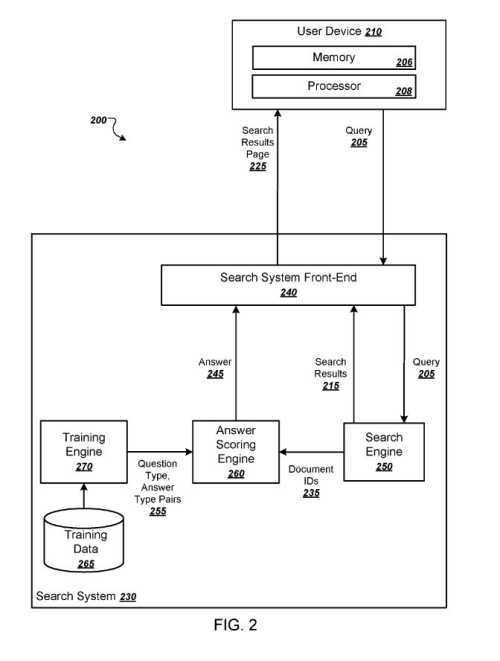

An Answer Scoring Engine

When someone performs a search, their query returns documents in response.

An answer scoring engine receives document IDs and may generate answers that could be included in the search results page.

Those document IDs will identify a subset of documents referenced by the search results.

An answer scoring engine may generate an answer by using question type/answer type pairs received from a training engine. (These could be the question and answer shown in an answer box.)

The answer scoring engine can identify for the query one or more question types matching the terms of the query, and for each question type, one or more answer types associated with that question type.

Each question type specifies one or more question elements that are characteristic of an answer-seeking query.

Similarly, each corresponding answer type specifies one or more answer elements that are characteristic of an answer to an answer-seeking query.

Question types and answer types will be described in more detail below concerning FIG. 3.

The training engine identifies pairs of question types and answer types.

The training engine processes training examples in a collection of training data, which can include pairs of questions and answers to the questions.

A question and an answer may be chosen to respond to the query, such as the following on a query about how to change a tire:

Google has been providing information about specific types of questions, such as how-to questions, which I wrote about in more detail recently in the post, How Google May Choose Answers to How-to Queries.

The patent I wrote about in that post focused upon trying to find confidence in the steps that might answer such a query, rather than this one which is more about deciding whether a query is an answer-seeking query and whether an answer provides a good response to that query.

Generating question element/answer element pairs

This search system will process question/answer pairs in training data to define question types and corresponding answer types.

It will compute statistics that represent which question type/answer type pairs are most likely to generate good answers for answer-seeking queries.

That determination takes place on a computer system referred to as a training engine.

It begins by identifying training data.

Training data is data that associates questions with answers, such as question and answer pairs.

Training data can include queries determined to be answer-seeking and snippets of search results selected by searchers, either in general or selected more frequently than other search results.

In this training data, the system may filter certain types of words and phrases out of questions, such as stop words.

So, “how to cook lasagna” may be filtered to generate “how cook lasagna.”

The system may also remove other parts of a question, such as adjectives and prepositional phrases.

So a query such as “where is the esophagus located in the human body” may be filtered to generate “where is esophagus located.”

The system can also transform terms in the questions and answers into canonical forms.

This means that inflected forms of the term “cook,” e.g., “cooking,” “cooked,” “cooks,” and so on, may be transformed into the canonical form “cook.”
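The stop-word filtering and canonicalization steps can be sketched as below. The stop-word list and the canonical-form table are hypothetical and only large enough for this post’s examples; a real system would use a full lexicon or stemmer. Removing adjectives and prepositional phrases needs a parser and is not attempted here.

```python
# Hypothetical, deliberately tiny tables for illustration only.
STOP_WORDS = {"to", "the", "a", "an", "in", "of"}
CANONICAL = {"cooking": "cook", "cooked": "cook", "cooks": "cook"}


def normalize(question):
    """Drop stop words, then map inflected forms to a canonical form."""
    terms = question.lower().split()
    kept = [term for term in terms if term not in STOP_WORDS]
    return [CANONICAL.get(term, term) for term in kept]


print(normalize("how to cook lasagna"))  # ['how', 'cook', 'lasagna']
print(normalize("Cooking the lasagna"))  # ['cook', 'lasagna']
```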

Question types may be defined from question elements in the training data.

A question type is a group of question elements that taken together represents the characteristics of an answer-seeking query.

The question type (how, cook) specifies two question elements, “how,” and “cook.”

A query matches this question type when it has terms that match all of the question elements in the question type.

The query “how to cook pizza” matches the question type (how, cook) because the query includes all question elements of the question type.

The patent tells us that question types can be ordered or unordered. (This appears to be an indication of whether a query is a “how to” query showing specific steps to follow in a certain order.)

The patent uses curly brackets to indicate that a question type is ordered.

So, a query will match the question type {how, cook} if and only if the term “how” occurs in the query before the term “cook.”
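The difference between unordered (how, cook) and ordered {how, cook} matching can be sketched as two small functions. Python sets have no order, so both kinds of question type are represented here as tuples; that representation is an assumption of the sketch.

```python
def matches_unordered(question_type, terms):
    """(how, cook): every element appears somewhere in the query."""
    return all(element in terms for element in question_type)


def matches_ordered(question_type, terms):
    """{how, cook}: the elements appear in the query in the given order."""
    position = 0
    for element in question_type:
        try:
            position = terms.index(element, position) + 1
        except ValueError:
            return False
    return True


terms = "how to cook pizza".split()
print(matches_unordered(("how", "cook"), terms))  # True
print(matches_ordered(("how", "cook"), terms))  # True
print(matches_ordered(("cook", "how"), terms))  # False: "how" comes before "cook"
```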

To define question types, the system looks at each question in the training data and determines which terms of the question match any of a set of question element types.

Each question element type represents a characteristic of an n-gram occurring in a question.

Common Question Element Types

The patent lists some common question element types, which include:

Entity instance–The entity instance type matches n-grams that represent entity instances. As an example, the n-gram “Abraham Lincoln” matches a question element type because this n-gram is an instance of an entity. When an n-gram matches this question element type, the resulting question type includes a question element representing the entity instance of the n-gram, e.g., (entity/Abraham_Lincoln). Other n-grams that match any aliases of the same entity will also match this question element, e.g., “Abe Lincoln,” “President Lincoln,” and “Honest Abe.”

Entity class–The entity class type matches n-grams that represent instances of entity classes. As an example, the n-gram “lasagna” matches this question element type because it is an instance of an entity class representing food dishes. When an n-gram matches this question element type, a resulting question type includes a question element representing the entity class, e.g., (entity/dishes).

Part of speech class–The part-of-speech class type matches n-grams that represent instances of part-of-speech classes. For example, the n-gram “run” matches this question element type because it is an instance of a part-of-speech class “verbs.” When an n-gram matches this question element type, the resulting question type includes a question element representing the matching part-of-speech class, e.g., (part-of-speech/verb).

Root word–The root word type matches n-grams that the system determines to be the root word of a question. In general, a root word is a term that does not depend on other terms in the question. For example, in “how to cook lasagna,” “cook” is the root word. Thus, “cook” would match this question element type when “cook” occurs in the query “how to cook lasagna.” The resulting question type includes the matching n-gram, e.g., (cook).

N-gram–The n-gram type matches any n-gram. However, the patent tells us that to avoid the overly voluminous generation of question types from the training data, the system can restrict n-gram question elements to a predefined set of n-grams.

A search system may predefine n-gram question elements to include question words and phrases, such as, “how,” “how to,” “when,” “when was,” “why,” “where,” “what,” “who”, and “whom.”

An n-gram can match more than one of these question element types.

So, the n-gram “George Washington” matches both the entity instance type, resulting in the question element entities/George_Washington, as well as the entity class type, resulting in the question element entities/us_presidents.

The n-gram “George Washington” may also match the n-gram type depending on how the system limits the number of n-gram types.

Also, the term “cook” matches the root word type, the entity instance type, and the entity class type.

An Example of Broadening Questions and Answer Types

After this system identifies matching question element types, it can generate question types by combining question elements in different ways, at varying lengths and multiple levels of generality.

This can allow the discovery of question types that provide a good balance between generality and specificity.

For example, take the question “how to cook lasagna.”

The first term “how” matches only the n-gram element type.

But, “cook” matches the n-gram element type, the root word element type, and the entity class element type for the class “hobbies.”

Thus, the system can generate the following two-element question types by selecting different combinations of matching question elements:

(how, cook)

(how, entity/hobbies)

The term “lasagna” matches the n-gram element type and the entity class element type “dishes.” Thus, the system can generate the following three-element question types by selecting different combinations of matching question elements:

(how, cook, lasagna)

(how, cook, entity/dishes)

(how, entity/hobbies, entity/dishes)

(how, entity/hobbies, lasagna)
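The combinations above amount to choosing one matching question element per term. Here is a minimal sketch using `itertools.product`; the element lists come from this example (with “to” already filtered out), and restricting combinations to leading runs of two or more terms is a simplifying assumption, since the patent only says combinations are generated at varying lengths.

```python
from itertools import product

# Matching question elements per term, from the "how to cook lasagna" example.
MATCHES = {
    "how": ["how"],
    "cook": ["cook", "entity/hobbies"],
    "lasagna": ["lasagna", "entity/dishes"],
}


def question_types(terms, matches):
    """Pick one matching element per term, for leading runs of 2+ terms."""
    types = []
    for length in range(2, len(terms) + 1):
        options = [matches[term] for term in terms[:length]]
        types.extend(product(*options))
    return types


for question_type in question_types(["how", "cook", "lasagna"], MATCHES):
    print(question_type)
```

This yields the two two-element types and the four three-element types listed above.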

Choosing Answer Element Types

The patent defines an “answer type” as a group of answer elements that collectively represent the characteristics of a proper answer to an answer-seeking query.

The system may generate answer types by processing the answers in the training data and deciding, for each answer, which terms of the answer match any of a set of answer element types.

The search system may generate answer types by finding answer elements that fit with the answer element types.

Some common answer element types, and their corresponding answer elements, include:

Measurement–The measurement type may match terms representing numerical measurements. These can include:

- Dates, e.g., “1997,” “Feb. 2, 1997,” or “2/19/1997”

- Physical measurements, e.g., “1.85 cm,” “12 inches”

- Time durations, e.g., “10 minutes,” “1 hour”

- Any other appropriate numerical measurement

N-gram–The n-gram type matches any n-gram in an answer. To avoid the overly voluminous generation of answer types, the system may restrict n-gram answer elements to n-grams below a certain value of n that are not common. For example, the system can restrict n-gram answer elements to 1-grams and 2-grams having an inverse document frequency score that satisfies a threshold.
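The IDF restriction can be sketched with the textbook formula IDF = log(N / document frequency). The patent does not give its exact IDF formula or threshold, so the formula choice, the tiny corpus, and the threshold of 1.0 are all assumptions for illustration.

```python
import math
from collections import Counter


def grams(terms):
    """All 1-grams and 2-grams of a term list, as tuples."""
    return [(t,) for t in terms] + list(zip(terms, terms[1:]))


def idf_scores(documents):
    """IDF = log(N / number of documents containing the gram)."""
    doc_freq = Counter()
    for terms in documents:
        doc_freq.update(set(grams(terms)))
    return {gram: math.log(len(documents) / df) for gram, df in doc_freq.items()}


def answer_ngram_elements(answer, idf, threshold):
    """Keep 1-grams and 2-grams of the answer whose IDF meets the threshold.
    Grams never seen in the corpus are skipped in this sketch."""
    return [g for g in grams(answer.lower().split()) if idf.get(g, 0.0) >= threshold]


corpus = [s.split() for s in [
    "obama was born in honolulu",
    "the president was born in 1961",
    "he was in washington",
    "the cake was in the oven",
]]
idf = idf_scores(corpus)
print(answer_ngram_elements("Obama was born in Honolulu", idf, threshold=1.0))
# [('obama',), ('honolulu',), ('obama', 'was'), ('in', 'honolulu')]
```

Common terms such as “was” and “in” appear in every document, get an IDF of zero, and are dropped.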

Verb–The verb type matches any terms that the system determines to be verbs.

Preposition–The preposition type matches any terms that the system determines to be prepositions.

We are told that a system can define answer element types for any part-of-speech.

But, in some implementations, the system may use only verb and preposition types.

Entity instance–The entity instance type matches n-grams that represent entity instances.

An answer type may include an answer element representing an entity instance, e.g., (entity/Abraham_Lincoln).

N-gram near entity–The n-gram-near-entity type uses both the n-gram answer element type and the entity instance answer element type and also imposes a restriction that the n-gram occur near the entity instance in an answer. The system can consider an n-gram to be near an entity instance when the n-gram:

- Occurs in the answer within a threshold number of terms of the entity instance

- Occurs in the same sentence as the entity instance

- Occurs in the same passage as the entity instance

For example, in the answer “Obama was born in Honolulu,” the uncommon n-gram “Honolulu” occurs within five terms of the entity instance “Obama.” The resulting answer type includes the n-gram and the entity instance, e.g., (entity/Obama near Honolulu).
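The “within a threshold number of terms” test can be sketched as a window around the entity mention. Assumptions here: the entity mention is a single word already linked to a hypothetical ID, and an optional `keep` filter stands in for the uncommon-n-gram, verb, or preposition restrictions described in this list.

```python
def near_entity_elements(terms, entity_word, entity_id, window=5, keep=None):
    """Build '<entity> near <term>' answer elements for terms within `window`
    terms of a single-word entity mention; `keep` optionally filters terms."""
    mentions = [i for i, term in enumerate(terms) if term == entity_word]
    elements = []
    for i, term in enumerate(terms):
        if i in mentions:
            continue  # skip the entity mention itself
        close = any(abs(i - m) <= window for m in mentions)
        if close and (keep is None or keep(term)):
            elements.append(f"{entity_id} near {term}")
    return elements


terms = "obama was born in honolulu".split()
print(near_entity_elements(terms, "obama", "entity/Obama"))
# ['entity/Obama near was', 'entity/Obama near born', 'entity/Obama near in', 'entity/Obama near honolulu']
print(near_entity_elements(terms, "obama", "entity/Obama", keep=lambda t: t in {"in"}))
# ['entity/Obama near in']
```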

Verb near entity–the verb-near-entity type uses both the verb answer element type and the entity instance answer element type and similarly imposes a restriction that the verb occurs near the entity instance in an answer. For example, for “Obama was born in Honolulu,” the resulting answer type can include the answer element (entity/Obama near born).

Preposition near entity–The preposition-near-entity type uses both the preposition answer element type and the entity instance answer element type and similarly imposes a restriction that the preposition occur near the entity instance in the answer. For example, for “Obama was born in Honolulu,” the resulting answer type can include the answer element (entity/Obama near in).

Verb class–The verb class type matches n-grams that represent instances of verb classes. For example, the system can identify all of the following verbs as instances of the class verb/blend: add, blend, combine, commingle, connect, cream, fuse, join, link, merge, mingle, mix, network, pool. The resulting answer type includes an answer element representing the verb class, e.g., (verb/blend).

Skip grams–The skip-gram type specifies a bigram, as well as the number of terms that occur between the terms of the bigram. For example, if the skip value is 1, the skip-gram “where * the” matches all of the following n-grams: “where is the,” “where was the,” “where does the,” and “where has the.” The resulting answer type includes an answer element representing the bigram and the skip value, e.g., (where * the), where the single asterisk represents a skip value of 1.
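A skip-gram check like “where * the” can be sketched as follows; the function name and signature are hypothetical.

```python
def matches_skip_gram(terms, first, second, skip):
    """True if `first` is followed by exactly `skip` terms and then `second`."""
    return any(
        terms[i] == first and terms[i + skip + 1] == second
        for i in range(len(terms) - skip - 1)
    )


for phrase in ["where is the", "where was the", "where does the", "where is a"]:
    print(phrase, matches_skip_gram(phrase.split(), "where", "the", skip=1))
```

The first three phrases match and “where is a” does not, because the term after the skipped position must be “the.”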

The system computes counts for question type/answer type pairs.

Scoring Question and Answer Pairs

The patent tells us that the system will compute a score for each question type/answer type pair.

This score is based on “the predictive quality of a particular question type/answer type pair as reflected by the training data.”

A question type/answer type pair with a good score is likely to have an answer type with one or more answer elements that collectively represent characteristics of a proper answer to an answer-seeking query represented by the question type.

The system will typically wait until all the counts have been computed before computing a score for a particular question type/answer type pair.

In some implementations, the system computes a point-wise mutual information (PMI) score for each pair. A PMI score of zero indicates that the question and answer are independent and have no relation. A high score, on the other hand, represents a higher likelihood of finding the answer type matching answers to questions matching the corresponding question type.
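PMI is defined as log(P(q, a) / (P(q) · P(a))). The patent names PMI but not how its probabilities are estimated, so this sketch assumes a simple counting scheme: each training question/answer pair contributes the question types its question matches and the answer types its answer matches. The four training examples are made up.

```python
import math
from collections import Counter
from itertools import product


def pmi_scores(examples):
    """examples: one (question_types, answer_types) pair of sets per training Q/A pair.

    PMI(q, a) = log(P(q, a) / (P(q) * P(a))), with each probability estimated
    as a count divided by the number of training examples N.
    """
    n = len(examples)
    q_counts, a_counts, pair_counts = Counter(), Counter(), Counter()
    # First pass: gather every count before scoring any pair.
    for question_types, answer_types in examples:
        q_counts.update(question_types)
        a_counts.update(answer_types)
        pair_counts.update(product(question_types, answer_types))
    # Second pass: the probabilities reduce to c(q, a) * N / (c(q) * c(a)).
    return {
        (q, a): math.log(count * n / (q_counts[q] * a_counts[a]))
        for (q, a), count in pair_counts.items()
    }


WHEN_BORN, HOW_COOK = ("when", "born"), ("how", "cook")
MEASURE, BLEND = ("measurement",), ("verb/blend",)
examples = [
    ({WHEN_BORN}, {MEASURE}),
    ({WHEN_BORN}, {MEASURE}),
    ({HOW_COOK}, {BLEND}),
    ({HOW_COOK}, {MEASURE}),
]
scores = pmi_scores(examples)
```

A positive score means the answer type co-occurs with the question type more often than independence would predict; a negative score means less often.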

The system selects question type/answer type pairs with the best scores. The pairs may be ranked, and those with a score that satisfies a threshold can be selected.

Answer types of the selected pairs are likely to represent proper answers to answer-seeking queries represented by the corresponding question types.

This answer-seeking query system may then index the selected question type/answer type pairs by question type so that the system can efficiently obtain all answer types associated with a particular question type during online scoring.

It may sort the question type index by scores to make real-time decisions about how many answer types to try.

This system may sort each answer type associated with a question type by score so that the answer types having the highest scores can then be processed first at query time.
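Selecting, indexing, and sorting can be sketched together: keep the pairs whose score satisfies a threshold, group them by question type, and sort each group best-first so query-time code can try only the top few answer types. The scores and threshold below are hypothetical.

```python
from collections import defaultdict


def build_question_type_index(pair_scores, threshold):
    """Group pairs that satisfy the threshold by question type, best answer type first."""
    grouped = defaultdict(list)
    for (question_type, answer_type), score in pair_scores.items():
        if score >= threshold:
            grouped[question_type].append((score, answer_type))
    index = {}
    for question_type, entries in grouped.items():
        entries.sort(key=lambda entry: entry[0], reverse=True)
        index[question_type] = [answer_type for _, answer_type in entries]
    return index


# Hypothetical scores, e.g. PMI values computed from training data.
pair_scores = {
    (("when", "born"), ("measurement",)): 1.2,
    (("when", "born"), ("born", "in")): 0.4,
    (("when", "born"), ("verb/blend",)): -0.3,
    (("how", "cook"), ("verb/blend",)): 0.9,
}
index = build_question_type_index(pair_scores, threshold=0.0)
print(index[("when", "born")])  # [('measurement',), ('born', 'in')]
```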

Process for Generating Answers for Answer-Seeking Queries

This patent reminded me of another patent I have written about in the past involving answering questions. That patent was one I wrote about in the post, Does Google Use Schema to Write Answer Passages for Featured Snippets?

That post describes how Google might choose among well-written textual answers to questions that also have structured data associated with them, which provides additional facts for those answers. It didn’t provide the kind of analysis this patent does of the elements of answer-seeking queries and the elements of their answers.

The description in this patent ends by providing more details about how answers are generated specifically for answer-seeking queries.

The process begins by receiving a query and obtaining search results for it.

It then determines a question type that matches the query.

If the query does not match any of the generated question types, the system can determine that the query is not an answer-seeking query.

In that case, it responds with a search results page that has no answer box.

The type of the answer-seeking query is defined by the elements of the matching question type. The search system may then decide upon passages of text likely to be good answers to the answer-seeking query.

To do this, it may access a question type index that associates each matching question type with one or more answer types.

The search system may then score passages of text from the search results according to the obtained answer types.

The search system may determine whether the score of the highest-scoring answer satisfies a threshold. If the score does not satisfy a threshold, the system can decide that the answer is not a good answer to the query and can decline to show that answer on the search results page.

If the score does satisfy the threshold, the search system may include the highest-scoring answer in the search results page and provide the search results page in response to the query.
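The online decision described in the last few paragraphs can be sketched end to end. Assumptions: an index like the one described earlier (question type to answer types, best first), question and answer elements that are plain lowercase terms, and a made-up cap on how many answer types to try per question type. Returning `None` stands for serving the results page without an answer box.

```python
import re


def choose_answer(query, question_index, passages, threshold, max_answer_types=5):
    """Return a passage for the answer box, or None to show plain results."""
    query_terms = set(query.lower().split())
    matching = [q for q in question_index if set(q) <= query_terms]
    if not matching:
        return None  # not answer-seeking: results page without an answer box
    # Try only the best-scoring answer types for each matching question type.
    answer_types = [a for q in matching for a in question_index[q][:max_answer_types]]

    def score(passage):
        terms = set(re.findall(r"[a-z0-9]+", passage.lower()))
        return sum(1 for a in answer_types if set(a) <= terms)

    best = max(passages, key=score, default=None)
    if best is None or score(best) < threshold:
        return None  # best candidate is not a good enough answer
    return best


question_index = {("when", "born"): [("born", "on"), ("born",)]}
passages = ["Washington crossed the Delaware in 1776.", "He was born on Feb. 22, 1732."]
print(choose_answer("when was george washington born", question_index, passages, threshold=2))
```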

Final Takeaways on Answer Seeking Queries

This patent does provide some additional aspects of how the process described in this patent works and states that it may also involve other steps that aren’t necessarily covered.

I have pointed to at least one other patent (the one on how-to queries) that describes more aspects of how an answer may be chosen that aren’t detailed in this patent.

So, it makes sense to look at other patents that cover additional aspects of responding to queries that seek answers, like the ones I linked to above about natural language answers and responding to queries using answer passages.

I also wrote about how Google might generate knowledge graphs in response to queries, and consider association scores between entities and classifications and attributes of those entities to answer questions in the post Answering Questions Using Knowledge Graphs.

We have no clear guidance on how the approaches in the patents those posts describe fit together, but being aware that they exist, and considering them when thinking about how Google may respond to answer-seeking queries, may be helpful.

About Bill Slawski
