What Is Crawl Depth?

Crawl depth, or click depth, is the number of links a bot has to follow, or the number of clicks a user has to make, to reach a particular page of your website from a starting point. The homepage is generally the starting point. Pages linked directly from the homepage are considered the second level, one click away. Those pages link to other pages, and the site continues to branch out from there.
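To make the definition concrete, here is a minimal sketch that computes click depth with a breadth-first search over a link graph. The `site` dictionary and its URLs are hypothetical, and the sketch counts clicks, so the homepage has a depth of 0 and pages linked from it have a depth of 1.

```python
from collections import deque


def click_depths(links, start):
    """Return {page: minimum number of clicks needed to reach it from start}."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            # The first time a page is reached is via its shortest path.
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site structure: each key links to the pages in its list.
site = {
    "/": ["/shoes", "/about"],
    "/shoes": ["/shoes/running"],
    "/shoes/running": ["/shoes/running/trail-x"],
}

depths = click_depths(site, "/")
for page, depth in sorted(depths.items(), key=lambda item: item[1]):
    print(depth, page)
```

A page that can be reached by more than one path gets the depth of its shortest path, which is why one well-placed link can change a page's depth.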

Why Should We Care About Crawl Depth?

We care about crawl depth because it affects user experience, and because it influences whether search engines can crawl and index your most important content in a timely manner.

User Experience

On the user experience side, imagine you land on the homepage of a website for the very first time and try to navigate to a specific product page. If the product you are looking for is several clicks away from the homepage, you may get frustrated or confused along the way and end up leaving the site. A clear, logical path with an appropriate number of pages to navigate through is ideal for user experience, and may also improve conversion rate.

Getting Important Content Crawled & Indexed Quickly

The deeper a page lives within the hierarchy of your site, the less important it may appear to search engines, and the less often it may be crawled. There are workarounds, like manually submitting a page in Google Search Console and requesting that it be crawled and indexed. However, a request like this is only a suggestion and doesn’t necessarily speed up the crawling and indexing of that page. One way to help ensure that your most important content gets crawled often is to reduce its crawl depth by placing critical pages closer to the top of your overall site hierarchy.

How To Audit Crawl Depth With Screaming Frog

Process

Screaming Frog is a helpful tool for analyzing crawl depth. There are different ways you can use Screaming Frog for this type of review, but the following steps are a simple method to gain insights:

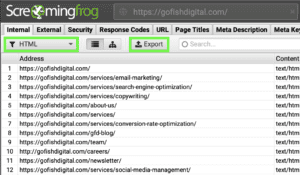

- Crawl the site with Screaming Frog starting from the homepage.

- In the “Internal” tab, filter by “HTML” and export the data to a spreadsheet.

- Filter the “Indexability” column and remove all non-indexable pages, since indexable pages are likely the only ones you need users and search engines to find.

- Sort the “Crawl Depth” column in descending order, so pages with the largest crawl depth will appear at the top.

- Identify key pages of the site with a crawl depth greater than three.

- Determine ways to reduce crawl depth if necessary. Prioritize the most important pages.
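The spreadsheet steps above can also be sketched in a few lines of Python. This is only an illustration: it assumes the export is a CSV with columns named “Address”, “Indexability” and “Crawl Depth”, and that indexable pages are marked with the value “Indexable”. Check the headers and values in your own export before relying on it. The sample rows are made up.

```python
import csv
import io


def deep_indexable_pages(csv_file, max_depth=3):
    """Return (url, depth) pairs for indexable pages deeper than max_depth,
    sorted with the deepest pages first."""
    rows = csv.DictReader(csv_file)
    pages = [
        (row["Address"], int(row["Crawl Depth"]))
        for row in rows
        if row["Indexability"] == "Indexable"  # assumed value; drops non-indexable rows
    ]
    pages.sort(key=lambda page: page[1], reverse=True)
    return [(url, depth) for url, depth in pages if depth > max_depth]


# Made-up sample standing in for a real export file.
sample_export = io.StringIO(
    "Address,Indexability,Crawl Depth\n"
    "https://example.com/,Indexable,0\n"
    "https://example.com/a/b/c/d,Indexable,4\n"
    "https://example.com/old,Non-Indexable,5\n"
    "https://example.com/a/b/c/d/e,Indexable,5\n"
    "https://example.com/a,Indexable,1\n"
)

deep_pages = deep_indexable_pages(sample_export)
for url, depth in deep_pages:
    print(depth, url)
```

To run it against a real export, pass an open file instead of the sample, for example `open("internal_html.csv", newline="", encoding="utf-8-sig")`, where the filename is a placeholder for whatever you named your export.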

Things to Consider

The “3-Click Rule”

SEOs commonly use the “3-Click Rule” when auditing crawl depth. The 3-Click Rule says that no page should be more than three clicks away from the homepage. This is not a perfect rule of thumb, however, and should only be used as a general guide to help identify potential problems. There are exceptions to the 3-Click Rule that should be considered.

Exceptions to the 3-Click Rule

Sometimes it just makes sense to have pages that are more than three clicks away from the homepage. This is especially common on very large websites, sites with paginated content, and ecommerce sites. Ecommerce sites often break up content into separate categories, subcategories, and product pages.

Here is how the navigation path to a product page may look on a large ecommerce site:

Homepage → Category Page → Subcategory 1 page → Subcategory 2 (sub-subcategory) page → Product page.

That’s four clicks! So is it a problem? Not necessarily, as long as the path makes logical sense and is justified.

My general advice is to start on the homepage and manually navigate through the pages until you reach the destination. This can help you determine if the path makes sense and is easy for users to follow. If so, then you should be okay to leave it as is.

Tip: You could review your conversion path in Google Analytics to see if any page has an unusually high exit rate. If so, users could be leaving the site because they are frustrated by the number of clicks it takes to get to a product page or conversion. If that seems to be the case, you may want to look for ways to consolidate pages along the path to reduce crawl depth and make it more efficient for users.

Ways to Reduce Crawl Depth

Add Internal Links to Important Pages Within Your Content

If an important page lives deep within your site, you can reduce its click depth by linking directly to it from a page near the top of your site’s hierarchy. For example, you could link to a popular product page within the content of your homepage. This puts the product page just one click from the homepage, or on the second level of the site if you count the homepage as the first.

Update Your Navigation Menu

It’s possible to reduce the number of clicks required to reach certain pages of your site by changing your navigation or adding new types of navigation menus. Very large ecommerce sites may benefit from using multiple dropdown menus or even a mega menu, so that pages very deep in the site can be reached quickly and directly through the navigation menu.

There are many different types of navigation menus. The best type for your site will depend on a number of factors, such as the number of pages you have, industry, CMS limitations, etc. Some of the most common types include:

- Single-Bar Navigation

- Double-Bar Navigation

- Secondary Dropdown Menus

- Tertiary Dropdown Menus

- Mega Menus

- Side-Bar Menus

- Footer Navigation

Here are some examples of what some of these menus look like:

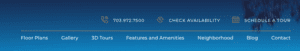

Single-Bar Navigation

Double-Bar Navigation

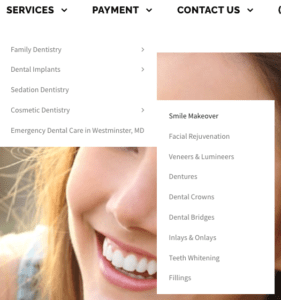

Secondary & Tertiary Dropdown Menus

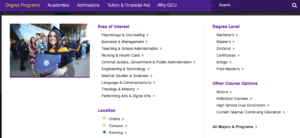

Mega Menu

Keep in mind that each type of navigation menu has pros and cons. When choosing which navigation menu to use, you should carefully research competitors in your industry and test different options if possible. Also, be sure to research ideal implementation methods for your selected navigation menu. This is very important because if the menu is implemented incorrectly, Google may not be able to crawl through its links to find all of the pages on your site.

Link to an HTML Sitemap

Creating an HTML sitemap and linking to it in the footer of your site can greatly reduce crawl depth. An HTML sitemap is basically a page that lists links to all of the important pages on your site. Linking to it in the footer is helpful because the footer typically appears on every page. This means that every page links to the sitemap, and the sitemap links directly to every page it lists, so any listed page is at most two clicks away from anywhere on the site.
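As a rough illustration of the effect, the sketch below compares the maximum click depth of a hypothetical site before and after adding a footer link to an HTML sitemap. The page names and the deliberately deep “chain” structure are made up for the example, and the homepage is counted as depth 0.

```python
from collections import deque


def max_click_depth(links, start="/"):
    """Return the largest minimum click count from start to any reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return max(depths.values())


# Hypothetical site where each page links only to the next one.
pages = ["/", "/a", "/b", "/c", "/d", "/e"]
chain = {pages[i]: [pages[i + 1]] for i in range(len(pages) - 1)}

# Same site, but every page also links to /sitemap (as a footer link would),
# and /sitemap links to every page.
with_sitemap = {page: chain.get(page, []) + ["/sitemap"] for page in pages}
with_sitemap["/sitemap"] = list(pages)

before = max_click_depth(chain)
after = max_click_depth(with_sitemap)
print(before, after)
```

In this toy example the deepest page goes from five clicks away to two: one click to the sitemap, one click from the sitemap to the page.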

Conclusion

An optimized crawl depth can boost user experience and help ensure your most important content gets crawled often and indexed quickly. When auditing your crawl depth, don’t forget to check in with your gut. If a navigation path feels long or challenging, that’s a good sign there’s an opportunity for improvement. There are several ways to reduce crawl depth. Do your research and choose the option or options that work best for you and your website.
