Ranking Passages at Google

The Google I/O developer conference was called off this year, and a SearchOn2020 presentation from the company was held instead. Several new features were announced at that event, many of which were described in a post on the Google blog titled How AI is powering a more helpful Google. That post told us that Google may soon be ranking passages:

Passages

Precise searches can be the hardest to get right, since sometimes the single sentence that answers your question might be buried deep in a web page. We’ve recently made a breakthrough in ranking and are now able to not just index web pages, but individual passages from the pages. By better understanding the relevancy of specific passages, not just the overall page, we can find that needle-in-a-haystack information you’re looking for. This technology will improve 7 percent of search queries across all languages as we roll it out globally. With new passage understanding capabilities, Google can understand that the specific passage (R) is a lot more relevant to a specific query than a broader page on that topic (L).

Google has told us that passages and featured snippets are different, but the example of a passage that Google shows in its blog post about passages looks exactly like a featured snippet. It is unclear what the differences between the two may be, although we have received some information: Barry Schwartz contacted Google about those differences and wrote about them in Google: Passage Indexing vs. Featured Snippets.

I have been writing about several Google patents that have the phrase “answer passages” in their titles. I have referred to these answer passages as featured snippets, and I believe that they are. However, it isn’t clear how much the passages that Google is now writing about differ from featured snippets. We will likely be given more details within the next couple of months. Google has also told us that the way it processes passages can help it better understand the content it finds on pages.

One of my posts covered an updated version of one of those patents and focused on the changes between the first version of the patent and the newer version. Still, I didn’t break down the process described in that patent in detail. The patent has much information on how answer passages are selected from pages and whether they use structured or unstructured data.

After hearing of Google’s plans to focus more on ranking passages, it made sense to spend more time on this Google patent about answer passages, titled Scoring candidate answer passages.

Before doing so, I would like to provide links to some of the other posts I’ve written about Google patents involving answer passages.

Google Patents about Answer Passages

When I first came across these patents, I attempted to put them into context. They appeared to be aimed at answering question queries with textual passages that were more than just facts responsive to those questions. They all describe these passages as answers that could be shown in an answer box when Google returns a set of search results for a query, possibly placed at the start of the organic search results.

These are the patents and posts that I wrote about them.

Candidate Answer Passages. This is a look at what answer passages are, how they are scored, and whether they are taken from structured or unstructured data. My post about this patent was Does Google Use Schema to Write Answer Passages for Featured Snippets?

Scoring Candidate Answer Passages. This patent is a recent update to a patent about how answer passages are scored. The updated claims in the patent emphasize that both query dependent and query independent signals are used to score answer passages. This updated version of the patent was granted on September 22, 2020. The post I wrote about this update was Featured Snippet Answer Scores Ranking Signals, in which I focused on the query dependent and query independent signals behind the process in this patent.

Context Scoring Adjustments for Answer Passages. This patent tells us how answer scores for answer passages might be adjusted based on the context of where they are located on a page. It goes beyond what is contained in the text of an answer to what the page title and headings on the page tell us about the context of that answer. My post based on this patent was Adjusting Featured Snippet Answers by Context.

Weighted Answer Terms for Scoring Answer Passages. This is the last of these patents I wrote about, and it was granted in July 2018. It tells us how Google might find questions on pages that match the query, along with the textual answers to those questions, and use them in scoring answer passages. My post about it was Weighted Answer Terms for Scoring Answer Passages.

When I wrote about the second of the patents listed above, I wrote about its updated version. So, instead of breaking down the whole patent, I focused on how the update showed that it was about more than query dependent ranking signals: query independent ranking signals were also important.

But the patent provides more details on what answer passages are. After reviewing it again, I felt it was worth filling in those details, especially since Google has told us that passages may play a larger role in how it understands content on pages.

The process Google might use to answer question-seeking queries may help Google understand the meaning of the content it indexes on pages. Let’s break down “Scoring Candidate Answer Passages” and see how it describes the creation of answer passages and the criteria for unstructured and structured answer passages (possibly the most detailed list of such criteria I have seen).

Scoring Candidate Answer Passages

At the heart of these answer passages patents are sentences like these:

Users of search systems often search for an answer to a specific question rather than a listing of resources. For example, users may want to know what the weather is in a particular location, a current quote for a stock, the capital of a state, etc. When queries in the form of a question are received, some search engines may perform specialized search operations in response to the question format of the query. For example, some search engines may provide information responsive to such queries in the form of an “answer,” such as information provided in the form of a “one box” to a question.

Some question queries are better served by explanatory answers, which are also referred to as “long answers” or “answer passages.” For example, for the question query [why is the sky blue], an answer explaining Rayleigh scatter is helpful. Such answer passages can be selected from resources that include text, such as paragraphs relevant to the question and the answer. Sections of the text are scored, and the section with the best score is selected as an answer.

The patent tells us that it follows a process that involves finding candidate answer passages from many resources on the web. Those candidate answer passages contain terms from the query that identify them as potential answer responses, and each one receives a query term match score, which is a measure of similarity of the query terms to the candidate answer passage. This Scoring Candidate Answer Passages patent provides information about how answer passages are generated.
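The patent doesn’t give a formula for the query term match score. As a rough illustration only, here is a minimal Python sketch that assumes the score is the fraction of distinct query terms that also appear in a candidate passage. The tokenizer and the function name `query_term_match_score` are my own hypothetical choices, not Google’s.

```python
import re

def tokenize(text: str) -> list[str]:
    """Lowercase word tokens (a deliberately simple tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())

def query_term_match_score(query: str, passage: str) -> float:
    """Hypothetical measure: fraction of distinct query terms found in the passage."""
    query_terms = set(tokenize(query))
    if not query_terms:
        return 0.0
    passage_terms = set(tokenize(passage))
    return len(query_terms & passage_terms) / len(query_terms)

score = query_term_match_score(
    "why is the sky blue",
    "The sky is blue because of Rayleigh scattering.",
)
print(score)  # → 0.8
```

A real similarity measure could be much richer than word overlap (for example, weighting terms by importance or matching synonyms), so treat this only as a way to picture what a “measure of similarity” might mean.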

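The patent also describes an answer term match score for each candidate answer passage (a measure of similarity of answer terms to the candidate answer passage). The query term match score and the answer term match score are used to determine a query dependent score, which is combined with a query independent score to score each candidate answer passage.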
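To picture how these scores might fit together, here is a hypothetical Python sketch that combines them with weighted sums. The weights, the `PassageScores` class, and the use of a linear combination are assumptions for illustration; the patent describes which scores feed into which, not how they are weighted.

```python
from dataclasses import dataclass

@dataclass
class PassageScores:
    query_term_match: float   # similarity of query terms to the passage
    answer_term_match: float  # similarity of answer terms to the passage
    query_independent: float  # a signal that does not depend on the query

def query_dependent_score(s: PassageScores, w_query: float = 0.5) -> float:
    # Hypothetical weighted sum of the two query dependent match scores.
    return w_query * s.query_term_match + (1 - w_query) * s.answer_term_match

def answer_score(s: PassageScores, w_dependent: float = 0.8) -> float:
    # Hypothetical blend of query dependent and query independent signals.
    return w_dependent * query_dependent_score(s) + (1 - w_dependent) * s.query_independent

candidates = {
    "passage A": PassageScores(0.8, 0.4, 0.5),
    "passage B": PassageScores(0.6, 0.9, 0.7),
}
best = max(candidates, key=lambda name: answer_score(candidates[name]))
print(best)  # → passage B
```

Here passage A matches the query terms better, but passage B wins because it matches the answer terms more closely and has a stronger query independent signal.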

We are told that one advantage of following the process from this patent is that candidate answer passages can be generated from both structured content and unstructured content – answers can be prose-type explanations and can also be a combination of prose-type and factual information, which may be highly relevant to the user’s informational need.

Also, both query dependent and query independent signals are used (I wrote about this aspect of the patent in my last post about it). The query dependent signals may be weighted based on the set of most relevant resources, which may tend to surface answer passages that are more relevant than passages scored against a larger corpus of resources. Because the scores are based on a combination of query dependent and query independent signals, the process can reduce processing requirements and more easily facilitate a scoring analysis at query time.

This patent can be found at:

Scoring candidate answer passages

Inventors: Steven D. Baker, Srinivasan Venkatachary, Robert Andrew Brennan, Per Bjornsson, Yi Liu, Hadar Shemtov, Massimiliano Ciaramita, and Ioannis Tsochantaridis

Assignee: Google LLC

US Patent: 10,783,156

Granted: September 22, 2020

Filed: February 22, 2018

Abstract

Methods, systems, and apparatus, including computer programs encoded on a computer storage medium, for scoring candidate answer passages. In one aspect, a method includes receiving a query determined to be a question query that seeks an answer response and data identifying resources determined to be responsive to the query; for a subset of the resources: receiving candidate answer passages; determining, for each candidate answer passage, a query term match score that is a measure of similarity of the query terms to the candidate answer passage; determining, for each candidate answer passage, an answer term match score that is a measure of similarity of answer terms to the candidate answer passage; determining, for each candidate answer passage, a query dependent score based on the query term match score and the answer term match score; and generating an answer score that is based on the query dependent score.

Generating Answer Passages

This answer passage process starts with a query that is a question query that seeks an answer response and data identifying resources that are responsive to the query.

The answer passage generator receives a query processed by the search engine with data showing responsive results. Those results are ranked based on search scores generated by the search engine.

The answer passage process identifies passages in resources.

Passages can be:

- A complete sentence

- A portion of a sentence

- A header

- Content of structured data, such as a list entry or a cell value

For example, passages could be headers, sentences, and list entries.

A number of appropriate processes may be used to identify passages, such as:

- Sentence detection

- Mark-up language tag detection

- Etc.
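As a sketch of how mark-up language tag detection and sentence detection might be combined, the following Python example uses the standard library’s `html.parser` to pull text out of headers, paragraphs, list entries, and table cells, then splits paragraphs into sentences at terminal punctuation. Which tags count as passage boundaries, and the naive sentence splitter, are my assumptions, not details from the patent.

```python
import re
from html.parser import HTMLParser

# Assumption: text inside these tags is treated as a separate passage.
PASSAGE_TAGS = {"title", "h1", "h2", "h3", "h4", "h5", "h6", "p", "li", "td", "th"}
SENTENCE_BREAK = re.compile(r"(?<=[.!?])\s+")

class PassageExtractor(HTMLParser):
    """Collect (tag, text) pairs for text found inside passage-level tags."""

    def __init__(self) -> None:
        super().__init__()
        self.passages: list[tuple[str, str]] = []
        self._open: list[str] = []    # stack of currently open passage tags
        self._buffer: list[str] = []  # text seen since the last boundary

    def handle_starttag(self, tag, attrs):
        if tag in PASSAGE_TAGS:
            self._flush()
            self._open.append(tag)

    def handle_endtag(self, tag):
        if self._open and self._open[-1] == tag:
            self._flush()
            self._open.pop()

    def handle_data(self, data):
        if self._open:
            self._buffer.append(data)

    def _flush(self):
        text = " ".join("".join(self._buffer).split())  # normalize whitespace
        if text and self._open:
            self.passages.append((self._open[-1], text))
        self._buffer = []

def identify_passages(html: str) -> list[tuple[str, str]]:
    parser = PassageExtractor()
    parser.feed(html)
    parser.close()
    passages = []
    for tag, text in parser.passages:
        if tag == "p":
            # Naive sentence detection: break after ., ! or ? plus whitespace.
            passages.extend((tag, s) for s in SENTENCE_BREAK.split(text) if s)
        else:
            passages.append((tag, text))
    return passages

page = (
    "<h2>How far away is the Moon?</h2>"
    "<p>The distance varies. It averages about 238,900 miles.</p>"
    "<ul><li>Perigee</li><li>Apogee</li></ul>"
)
for tag, text in identify_passages(page):
    print(tag, "|", text)
```

Real sentence detection has to cope with abbreviations, decimals, and other edge cases that this splitter ignores.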

Passage Selection Criteria

The answer passage process applies a set of passage selection criteria to the passages.

Each passage selection criterion specifies a condition that a passage must meet to be included in a candidate answer passage.

Passage selection criteria may apply to structured content, to unstructured content, or to both.

Unstructured content is content displayed in the form of text passages, such as an article, and is not arranged according to a particular visual structure that emphasizes relations among data attributes.

Structured content is content displayed to emphasize relations among data attributes, such as lists, tables, and the like.

While a resource may be structured using mark-up language (like HTML), the terms “structured content” and “unstructured content” refer to how content is visually formatted when rendered, and whether the arrangement of the rendered content follows a set of related attributes (e.g., attributes defined by row and column types in a table, where the content is listed in the cells of the table).

An answer passage generator generates, from passages that satisfy the set of passage selection criteria, a set of candidate answer passages.

Each candidate answer passage is eligible to be provided as an answer passage along with the search results that identify the resources determined to be responsive to the query, but shown separately and distinctly from those other search results, as in an “answer box.”

After answer passages are generated, an answer passage scorer is used to score each passage.

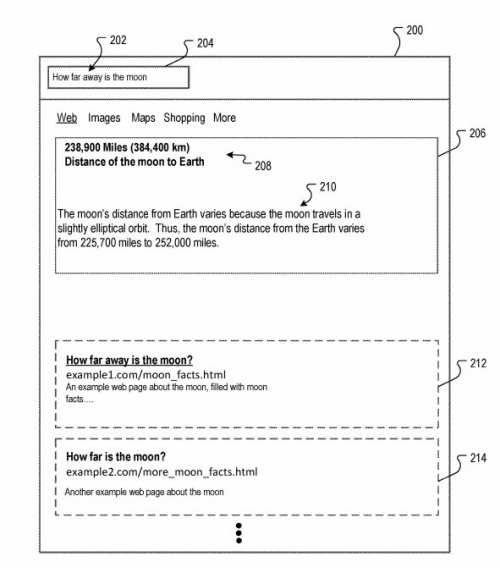

An answer passage may be generated from unstructured content. That means the answer might be textual content from a page, as in this drawing from the patent, showing a question with a written answer:

That answer passage is created from unstructured content, based on a query [How far away is the moon].

The query question processor identifies the query as a question query and also identifies the answer “238,900 Miles (384,400 km).”

The answer box includes the answer.

The answer box also includes an answer passage that has been generated and selected by the answer passage generator and the answer passage scorer.

The answer passage is one of several answer passages processed by the answer passage generator and the answer passage scorer.

Search results are also provided on the search results page. The search results are separate and distinct from the answer passage that shows up in the answer box.

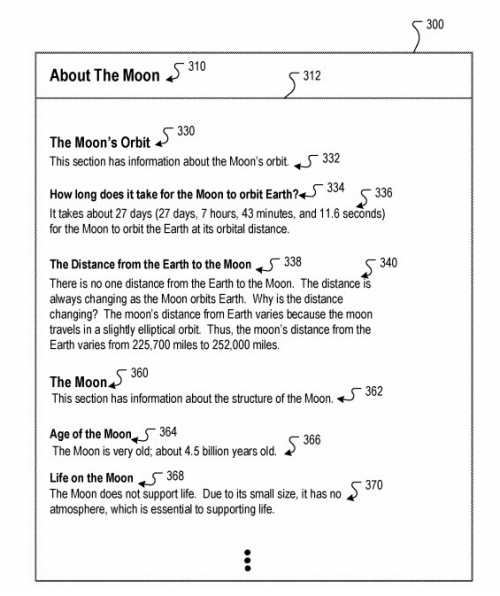

The patent shows a drawing of an example page from which the answer passage has been taken.

This web page resource is one of the top-ranked resources responsive to the query [How far away is the moon] and was processed by the answer passage generator, which generates multiple candidate answer passages from the content of top-ranked resources. This particular resource includes multiple headings.

Each heading has a corresponding text section that is subordinate to it. In the post Adjusting Featured Snippet Answers by Context, I wrote about a patent describing how headings can give answer passages context and be used to adjust the scores of those passages. The title of the page (About the Moon) is considered a root heading, at the top of the page’s heading hierarchy.
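To illustrate the idea of the page title acting as a root heading, here is a minimal Python sketch that attaches to each text section the chain of headings it falls under. The input format (heading levels 1 to 6, with `None` for body text) and the sample headings are assumptions for this example, and the sketch only builds the heading context; it does not show how the context scoring patent adjusts answer scores.

```python
from typing import Optional

def heading_paths(title: str, items: list[tuple[Optional[int], str]]) -> list[tuple[list[str], str]]:
    """Pair each text section with the chain of headings it is subordinate to.

    items are (level, text) in page order: level 1-6 for <h1>-<h6>, None for body text.
    The page title is treated as the root heading at level 0.
    """
    stack: list[tuple[int, str]] = [(0, title)]
    paths: list[tuple[list[str], str]] = []
    for level, text in items:
        if level is None:
            paths.append(([heading for _, heading in stack], text))
        else:
            # A new heading closes any open headings at the same or a deeper level;
            # max(level, 1) guarantees the root heading is never popped.
            while stack[-1][0] >= max(level, 1):
                stack.pop()
            stack.append((level, text))
    return paths

page = [
    (2, "How far away is the Moon?"),
    (None, "The distance varies."),
    (2, "Phases"),
    (None, "The Moon has phases."),
    (3, "Full moon"),
    (None, "The whole face is lit."),
]
for path, text in heading_paths("About the Moon", page):
    print(" > ".join(path), "|", text)
```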

This example page contains many passages, and the patent points out a few of them as candidate answer passages that might be used to answer a query about the distance from the Earth to the Moon:

(1) It takes about 27 days (27 days, 7 hours, 43 minutes, and 11.6 seconds) for the Moon to orbit the Earth at its orbital distance.

(2) Why is the distance changing? The moon’s distance from Earth varies because the moon travels in a slightly elliptical orbit. Thus, the moon’s distance from the Earth varies from 225,700 miles to 252,000 miles.

(3) The moon’s distance from Earth varies because the moon travels in a slightly elliptical orbit. Thus, the moon’s distance from the Earth varies from 225,700 miles to 252,000 miles.

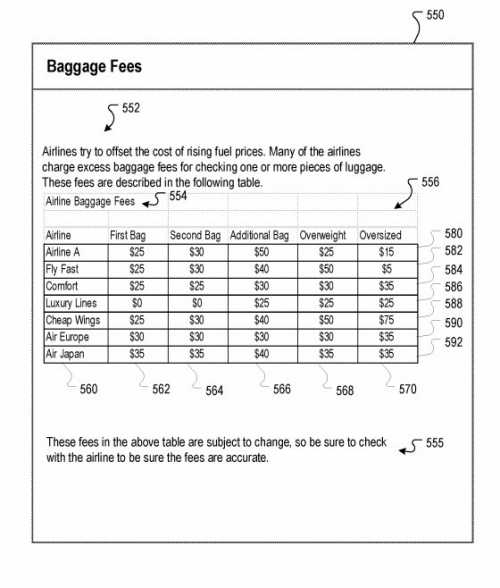

The patent also tells us about answer passages that are made from structured content. The patent provides an example drawing of one that uses a table to answer a question:

The resource it is taken from includes both unstructured content and the table.

The patent points out that the table includes both columns and rows, expressing data that shows the relationship between airlines and baggage fees in terms of prices.

Structured and Unstructured Content Criteria for Answer Passages

We have seen that both structured content and unstructured content can be used in answer passages. The patent describes the criteria that are considered when creating those passages, and it includes a drawing of the process of choosing passages of unstructured and structured content for candidate answer passages:

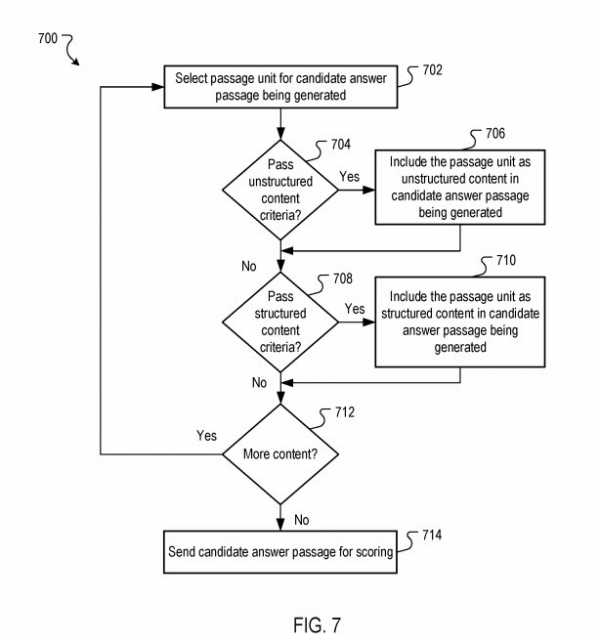

An answer passage generator implements the process.

It begins with the selection of a passage for a candidate answer passage that is being generated.

For example, on the page above about the distance from the Earth to the Moon, a header or a sentence may be selected.

The type of passage selected, whether structured content or unstructured content, may determine what types of criteria are applied to it.

Passages that use Unstructured Content Criteria

The following are some of the things that may be looked for when applying unstructured content criteria.

A Sentence Score indicates whether a passage is a complete sentence.

If it is not a complete sentence, it may be omitted as a candidate answer passage, or more content may be added to the passage until a complete sentence is detected.

A minimum number of words is another unstructured content criterion.

If a passage does not have a minimum number of words, it may not be chosen as a candidate answer passage, or more content may be added to the passage until the minimum number of words is reached in the passage.

Visibility of the content is another unstructured content criterion.

This visibility of content criterion may also be used for a passage using structured content.

If the content is text that, when rendered, is invisible to a user, then it is not included in a candidate answer passage.

Content can be processed to determine whether visibility tags would cause it to be visible, and so eligible for inclusion in a candidate answer passage.

Boilerplate detection may also cause a passage not to be considered.

Google may decide not to show boilerplate content as an answer passage in response to a query question.

This boilerplate criterion may also be used for structured content.

If the content is text determined to be boilerplate, it would not be included in a candidate answer passage.

Several appropriate boilerplate detection processes can be used.

Alignment detection is another unstructured content criterion.

If the content is aligned so that it is not next to or near other content already in the candidate answer passage (like two sentences that are separated by other sentences or a block of whitespace), it would not be included in the candidate answer passage.

Subordinate text detection is another unstructured content criterion.

Only text subordinate to a particular heading may be included in a candidate answer passage with that heading (otherwise, the heading would not apply to it).

Another unstructured content criterion limits a heading to being only the first sentence in a passage.

Image caption detection is also an unstructured content criterion.

A passage unit that is an image caption should not be combined with other passage units in a candidate answer passage.

Another unstructured content criterion covers unstructured content added after structured content in a candidate answer passage.

For example, if a candidate answer passage includes a row from the table, then the unstructured content labeled 555 in the patent’s drawing cannot be added after that row in the candidate answer passage.

Additional types of unstructured content criteria can include:

- A maximum size of a candidate answer passage

- Exclusion of anchor text in a candidate answer passage

- Etc.

If the process determines that the passage passes the unstructured content criteria, then the passage generation process includes the passage as unstructured content in a candidate answer passage being generated.
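Several of these unstructured content criteria can be pictured together in a short Python sketch. It assumes each passage unit has already been flagged for visibility, boilerplate, caption, and anchor text status by some other process, and it uses hypothetical thresholds (`MIN_WORDS`, `MAX_WORDS`) and a crude complete-sentence check; the patent does not give numbers or say how these checks are implemented. Alignment and heading criteria are left out to keep it short.

```python
from dataclasses import dataclass
from typing import Optional

MIN_WORDS = 5   # hypothetical minimum number of words
MAX_WORDS = 60  # hypothetical maximum candidate size

@dataclass
class PassageUnit:
    text: str
    visible: bool = True          # visible to the user when rendered?
    boilerplate: bool = False     # flagged by a boilerplate detector (assumed)
    is_caption: bool = False      # an image caption?
    is_anchor_text: bool = False  # link anchor text?

def is_complete_sentence(text: str) -> bool:
    # Crude check: starts with a capital letter and ends with terminal punctuation.
    return bool(text) and text[0].isupper() and text[-1] in ".!?"

def build_candidate(units: list[PassageUnit]) -> Optional[str]:
    """Add consecutive units until the text is a complete sentence with at least
    MIN_WORDS words; return None if the content runs out or grows too large."""
    parts: list[str] = []
    for unit in units:
        if not unit.visible or unit.boilerplate or unit.is_anchor_text:
            continue  # invisible text, boilerplate, and anchor text are excluded
        if unit.is_caption:
            continue  # this sketch never combines a caption with other units
        parts.append(unit.text.strip())
        text = " ".join(parts)
        word_count = len(text.split())
        if word_count > MAX_WORDS:
            return None  # maximum size exceeded before the criteria were met
        if is_complete_sentence(text) and word_count >= MIN_WORDS:
            return text
    return None  # ran out of content

units = [
    PassageUnit("Read more", is_anchor_text=True),
    PassageUnit("Hidden note.", visible=False),
    PassageUnit("The distance changes"),
    PassageUnit("because the orbit is elliptical."),
]
print(build_candidate(units))
```

The fragment “The distance changes” is not a complete sentence on its own, so the sketch keeps adding content until it is, mirroring the “more content may be added” option described above.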

Passages that use Structured Content Criteria

If a passage does not meet the unstructured content criteria, then the answer passage process determines whether the passage unit passes the structured content criteria.

Some structured content criteria may be applied when only structured content is included in the answer passage. Some structured content criteria may be applied only when there is structured and unstructured content in the answer passage.

Incremental list generation is one type of structured content criterion.

Passages are iteratively selected from the structured content. Only one passage unit from each relational attribute is selected before any second passage unit from a relational attribute is selected.

This iterative selection may continue until a termination condition is met.

For example, when generating the candidate answer passage from a list, the answer passage generator may only select one passage from each list element (e.g., one sentence.)

This ensures that a complete list is more likely to be generated as a candidate answer passage.

In the patent’s example, additional sentences are not included because a termination condition (e.g., a maximum size) was met, which precluded the inclusion of the second sentence of the first list element.

Generally, in short lists, the second sentence of a multi-sentence list element is less informative than the first sentence. Thus, the emphasis is on generating the list in order of sentence precedence for each list element.
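The incremental list generation criterion is essentially a round-robin selection. This hypothetical Python sketch takes the first sentence of every list element before any second sentence, and stops when the next sentence would exceed a word budget, which stands in for the termination condition. Measuring size in words is my assumption.

```python
import re

def split_sentences(text: str) -> list[str]:
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def incremental_list_passage(items: list[str], max_words: int) -> list[str]:
    """Take each list element's first sentence before any element's second
    sentence, and so on, stopping once the next sentence would exceed max_words."""
    sentences = [split_sentences(item) for item in items]
    selected: list[list[str]] = [[] for _ in items]
    used = 0
    depth = 0
    while any(depth < len(s) for s in sentences):
        for i, element in enumerate(sentences):
            if depth < len(element):
                n = len(element[depth].split())
                if used + n > max_words:  # termination condition reached
                    return [" ".join(parts) for parts in selected]
                selected[i].append(element[depth])
                used += n
        depth += 1
    return [" ".join(parts) for parts in selected]

fruits = [
    "Oranges are rich in vitamin C. They also contain fiber.",
    "Bananas provide potassium. They are easy to carry.",
    "Apples keep well. Many varieties exist.",
]
print(incremental_list_passage(fruits, 15))
# → ['Oranges are rich in vitamin C.', 'Bananas provide potassium.', 'Apples keep well.']
```

With a 15-word budget, the complete three-item list survives and the second sentences are dropped, which is the behavior the patent describes.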

Inclusion of all steps in a step list is another type of structured content criterion.

If the answer passage generator detects structured data that defines a set of steps (e.g., by detecting preferential ordering terms), all steps are included in the candidate answer passage.

Examples of such preferential ordering terms are terms that imply ordered steps, such as “steps,” or “first,” “second,” etc.

If a preferential ordering term is detected, all steps from the structured content need to be included in the candidate answer passage.

If including all steps exceeds a maximum passage size, then that candidate answer passage is discarded.

Alternatively, the maximum passage size can be ignored for that candidate answer passage.
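Here is a minimal Python sketch of the step list criterion. The set of ordering terms is a hypothetical list built from the examples above, measuring size in words is an assumption, and the `ignore_max_size` flag models the alternative of ignoring the maximum passage size instead of discarding the candidate.

```python
import re
from typing import Optional

# Hypothetical preferential ordering terms, based on the examples in the text.
ORDERING_TERMS = {"steps", "first", "second", "third", "next", "finally"}

def looks_like_steps(texts: list[str]) -> bool:
    """Detect a step list by the presence of a preferential ordering term."""
    words = {w for t in texts for w in re.findall(r"[a-z]+", t.lower())}
    return not ORDERING_TERMS.isdisjoint(words)

def step_list_passage(heading: str, steps: list[str], max_words: int,
                      ignore_max_size: bool = False) -> Optional[list[str]]:
    """Include every step or none: a step list is never truncated."""
    passage = [heading, *steps]
    size = sum(len(part.split()) for part in passage)
    if size > max_words and not ignore_max_size:
        return None  # discard the candidate instead of dropping steps
    return passage

heading = "Steps to change a tire"
steps = ["Loosen the nuts.", "Jack up the car.", "Swap the wheel."]
if looks_like_steps([heading, *steps]):
    print(step_list_passage(heading, steps, max_words=10))
    print(step_list_passage(heading, steps, max_words=10, ignore_max_size=True))
```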

Superlative ordering is another type of structured content criterion.

When the candidate answer passage generator detects a superlative query in which a query inquires of superlatives defined by an attribute, the candidate answer passage generator selects, from the structured content for inclusion in the candidate answer passage, a subset of passages in descending ordinal rank according to the attribute.

For example, for the query [longest bridges in the world], a resource with a table listing the 100 longest bridges may be identified.

The candidate answer passage generator may select the rows for the three longest bridges.

Likewise, if the query were [countries with smallest populations], a resource with a table listing the 10 smallest countries may be identified.

The candidate answer passage generator may select the rows for the countries with the three smallest populations.
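Superlative ordering amounts to ranking rows by the attribute named in the query and keeping the top few. In this Python sketch, the row data is made up for illustration, and `k=3` simply mirrors the three rows in the patent’s examples.

```python
def superlative_rows(rows: list[dict], attribute: str, largest: bool = True, k: int = 3) -> list[dict]:
    """Keep the k rows ranked first by `attribute`.

    largest=True suits queries like [longest bridges in the world];
    largest=False suits queries like [countries with smallest populations].
    """
    return sorted(rows, key=lambda row: row[attribute], reverse=largest)[:k]

# Made-up rows for illustration only.
bridges = [
    {"name": "Bridge A", "length_km": 10},
    {"name": "Bridge B", "length_km": 50},
    {"name": "Bridge C", "length_km": 30},
    {"name": "Bridge D", "length_km": 40},
]
print([row["name"] for row in superlative_rows(bridges, "length_km")])
# → ['Bridge B', 'Bridge D', 'Bridge C']
```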

Informational question query detection is another type of structured content criterion.

When the candidate answer passage generator detects an informational question query, in which a query requests a set of information for many attributes, the candidate answer passage generator may select the entire set of structured content if the entire set can be provided as an answer passage.

For example, for the query [nutritional information for Brand X breakfast cereal], a resource with a table listing the nutritional information of that cereal may be identified.

The candidate answer passage generator may select the entire table to include in the candidate answer passage.

Entity attribute query detection is another type of structured content criterion.

When the candidate answer passage generator detects that a question query is requesting an attribute of a particular entity or a defined set of entities, a passage that includes an attribute value of the attribute of the particular entity or the defined set of entities is selected.

As an example, for the question query [calcium nutrition information for Brand X breakfast cereal], the candidate answer passage generator may select only the attribute values of the table that describe the calcium information for the breakfast cereal.
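The informational question and entity attribute criteria can be sketched together. In this hypothetical Python example, a query that names a row label of the table (such as “calcium”) is treated as an entity attribute query, and any other query is treated as an informational query that gets the whole table if it fits. That detection rule, the size limit, and the table values are my assumptions, not the patent’s.

```python
from typing import Optional

def requested_attributes(query: str, table: dict[str, str]) -> list[str]:
    """Hypothetical detector: row labels that appear as words in the query."""
    query_words = set(query.lower().split())
    return [label for label in table if label.lower() in query_words]

def table_passage(query: str, table: dict[str, str], max_rows: int = 20) -> Optional[dict[str, str]]:
    attributes = requested_attributes(query, table)
    if attributes:
        # Entity attribute query: keep only the requested attribute values.
        return {label: table[label] for label in attributes}
    # Informational query: use the entire table if it fits in one passage.
    return dict(table) if len(table) <= max_rows else None

# Made-up nutrition table for the hypothetical "Brand X" cereal.
brand_x = {"Calories": "110", "Calcium": "10% DV", "Iron": "25% DV"}
print(table_passage("calcium nutrition information for Brand X breakfast cereal", brand_x))
# → {'Calcium': '10% DV'}
print(table_passage("nutritional information for Brand X breakfast cereal", brand_x))
```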

Key value pair detection is another type of structured content criterion.

When the structured content includes enumerated key-value pairs, then each passage must include a complete key-value pair.
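One way to honor the key-value pair criterion is to pack pairs into passages without ever splitting one. In this Python sketch, the baggage fee pairs are illustrative, and measuring passage size in words is an assumption.

```python
def key_value_passages(pairs: list[tuple[str, str]], max_words: int) -> list[str]:
    """Pack enumerated key-value pairs into passages without splitting a pair."""
    passages: list[str] = []
    current: list[str] = []
    used = 0
    for key, value in pairs:
        entry = f"{key}: {value}"
        n = len(entry.split())
        if current and used + n > max_words:
            passages.append("; ".join(current))  # start a new passage
            current, used = [], 0
        current.append(entry)  # a pair is always kept whole, even if it is long
        used += n
    if current:
        passages.append("; ".join(current))
    return passages

# Illustrative baggage fees, not real prices.
fees = [("Carry-on", "Free"), ("First checked bag", "$30"), ("Second checked bag", "$40")]
print(key_value_passages(fees, max_words=10))
print(key_value_passages(fees, max_words=5))
```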

Additional types of structured content criteria can include:

- A maximum size of a candidate answer passage

- Exclusion of anchor text in a candidate answer passage

- Etc.

If this process determines the passage passes the structured content criteria, then the process includes the passage as structured content in the candidate answer passage being generated.

If the process determines the passage does not pass the structured content criteria, the process may then determine if more content is to be processed for the candidate answer passage.

If there isn’t more content to be processed, then the process sends the candidate answer passages to the answer passage scorer.
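The overall flow described above can be summarized in a short Python sketch: each passage is tried against the unstructured content criteria first, then the structured content criteria, and the finished candidate is handed on for scoring. The criteria are passed in as functions, since the real checks are the ones listed in the sections above; the toy criteria in the usage example are hypothetical.

```python
from typing import Callable, Iterable

# A criterion looks at the next passage and the candidate built so far.
Criterion = Callable[[str, list[tuple[str, str]]], bool]

def generate_candidate(passages: Iterable[str],
                       passes_unstructured: Criterion,
                       passes_structured: Criterion) -> list[tuple[str, str]]:
    candidate: list[tuple[str, str]] = []
    for passage in passages:  # the loop ends when there is no more content
        if passes_unstructured(passage, candidate):
            candidate.append(("unstructured", passage))
        elif passes_structured(passage, candidate):
            candidate.append(("structured", passage))
        # a passage that fails both sets of criteria is left out
    return candidate  # this is what would go on to the answer passage scorer

def ends_like_prose(passage, candidate):
    return passage.endswith(".")

def looks_like_table_row(passage, candidate):
    return "|" in passage

result = generate_candidate(
    ["Fees vary by airline.", "Airline A | $30", "see more"],
    ends_like_prose,
    looks_like_table_row,
)
print(result)
```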

Query Dependent and Query Independent Ranking Signals

I have described how candidate answer passages may be selected. Once they are, they need to be scored. In the post I wrote about the update of this patent, Featured Snippet Answer Scores Ranking Signals, I covered the query dependent and query independent ranking signals that this patent describes in detail. Here, I wanted to write about the other details from this patent that I hadn’t covered in that post.

Other patents I have written about involving answer passages describe the scoring process and how those scores may be adjusted based on the context of headings on the pages where those passages are found.
